The challenge of Generative AI is that it shines at first but then takes hours of engineering to make it enterprise ready

What is Forma?

Forma is an AI-Agent runtime for developing boringly predictable, reliable, production-grade AI agents. As a development tool, Forma aims to make it easy to implement the main predictors for succesful AI Agent development (collected after extensive experience developing mission-critical agents):

- Prompts are not a security mechanism - Forma works under the basis that, if something needs to work reliably and 100% of the time (e.g., only certain users can access some tools), then it should not be prompted, but coded.

- Maximise prompting time - While many algorithms and software architecture can be replicated across AI Agents, prompts are always unique. Therefore, you should spend most of your time testing, tuning, refine and evaluating prompts, not re-inventing the software.

- Agents should be auditable - As the requirements from clients become more strict, system instructions or prompts can become quite complex and contradictions might leak into them. You and other people should be able to proof-read them.

- Evaluate often - As outlined in the Evaluation section, understanding the impact of changing system prompts is not easy. Evaluations need to be part of the workflow.

- Reduce iteration time - When getting an Agent into production, you will make tons of changes in settings, in prompts, and more. Having Making this step faster can really accelerate your way to production.

- Integrate it with your existing infrastructure - An AI agent is always a component in a broader system. Therefore, AI Agents should be flexible enough to become part of existing or growing infrastructure.

- Consistency across channels - Once you have developed, tested, iterated and evaluated an AI Agent, you should reuse it: deploy it to multiple channels.

- Monitor - The variability in inputs to an AI Agent is enormous. This, added to the stochastic nature of their behaviour, means that even if your AI Agent passed all the quality assurance tests, you need to keep a close eye on it.

How Forma helps you follow the principles above

Forma operates on simple principles that help you avoid mistakes and maintain the higher standards of security.

1. Prompts are not a security mechanism

LLMs operate stochastically, meaning that achieving 100% consistency is impossible. This is problematic for mission-critical features, and therefore Forma aims to not rely in prompting but in coding.

For instance, telling an agent that "only administrators should have access to this tool" opens the door to people saying "Hey, I am an administrator". Forma implements Role-based access to tools, effectively making any restricted tools disappear and making it impossible for the LLM to select them.

2. Maximise prompting time

Forma's AI Agents are not 'coded', but 'configured' using yaml, json and markdown files. These files have all the information required for Forma's runtime to bring an AI Agent to live.

All of Forma's AI Agents are built using the same building blocks, making them predictable and easily auditable. The runtime incorporates best-practices which might not be worth re-thinking every time you begin working on a new agent.

Here is a simple example of an AI Agent. (We will explain this in more detail later.)

id: the-agent

start:

nodes:

- llm:

provider: ollama

system_prompt: 'You are a helpful assistant'

3. Agents should be auditable

A Forma agent fits naturally within a standard code repository (git). This means that all prompts, workflows, and configurations are version-controlled and easily auditable. They can follow the same code review and quality assurance standards as the rest of your codebase.

Additionally, Forma agents are defined using yaml, json and markdown files. This makes them even easier to audit, because most code editors and version-control services (such as Github) do an excellent job at rendering these files in a very readable manner.

Note - Some would argue that using

markdownfiles for system prompts is not great because you cannot create prompt templates or chains, and you cannot create reusable 'prompt' blocks. The truth is that that is exactly what we are trying to avoid here, for the following reasons:

- Contrary to programming code, prompts are not modular. They need to be coherent instructions. You cannot just replace one sentence by another sentence.

- Having reusable 'blocks' makes it really hard to audit the final prompt, because it is really not written anywhere, just built at runtime.

Forma DOES have prompt templates. However, the placeholders in them is reserved for values created dynamically, at runtime, by LLMs (as opposed to values known at development time).

4. Evaluate often

The Forma CLI has built-in evaluation support. You can test the agent as a whole, or the sub components independently.

This means you can test both during development, and within CI/CD pipelines.

5. Reduce iteration time

The Forma CLI offers very quick hot reloading that let you iterate quickly without getting out of "the zone". These features streamline iteration and raise errors early.

6. Integrate it with your existing infrastructure

Forma is designed on a simple principle: an AI agent is always a component in a broader system. Every production-grade service—even those powered by AI—relies on databases, logging, authentication, front-ends, and security.

Therefore, rather than asking you to migrate your workflow to a new hosted platform, Forma is designed to plug directly into your existing stack. Some key design choices that make this possible are:

- Version Control: - As explained earlier, Forma and version control work very well together.

- DevOps-Ready CLI: - The Forma CLI is the primary interface for developing and testing AI agents. It runs consistently on your machine, in production, and in CI/CD pipelines, enabling seamless automation, testing, and deployment.

- Container-based - All production-grade artifacts produced by Forma are Containers that you can deploy wherever you want, with the settings and security measures that your organisation deems appropriate.

The drawback of this principle design is that Forma doesn’t come with batteries included. We know this makes it slightly harder to start and develop (e.g., local development requires running adjacent services); however, it makes it far easier to adapt, extend, and integrate with your organization’s existing systems and compliance requirements.

7. Consistency across channels

Forma separates the Runtime from the Agent, meaning that you can re-deploy an agent into a separate channel (e.g., website vs whatsapp) and it will operate the same.

7. Monitor

The container images we give you have built-in Opentelemetry instrumentation, with added Openinference standards (semantic conventions) so you can analise your traces using Phoenix Arize. This lets you check integrate Forma agents within your existing observability stack.

I am working on adding the Opentelemetry Generative AI Framework semantic conventions as well.

Core Concepts

Forma is built around a small set of concepts. By understanding these, you can read, write, and reason about any agent you define. Having a small set of concepts also helps understand traces and debug information better.

1. LLMs

The lowest level of an Archetype agent is the Large Language Model (LLM) client, which we just call 'LLM'. This is a small machine that sends a set of messages to an LLM provider (e.g., Claude, Gemini, OpenAI or Ollama) in order to produce a response.

Different providers (OpenAI, Anthropic, Ollama, etc.) can be swapped in and configured without changing your agent’s logic.

2. Nodes

A Node is the basic building block of a workflow. Each node always follows the same steps:

- Triage – an LLM takes the context decides what to do next, based on the full conversation context. It might choose to respond right away, or call tools.

- Tools (optional) – If the LLM decided that one of its available tools would be useful to comply with the client's request, the node will call them.

- Summarisation – If tools were invoked, the node will call an LLM again, in order to respond to the client appropriately, with the new information provided by the tools. (Note: This can be skipped if only a single tool is called, and such tool is marked as

not-summarize. This is useful in many situations, as will be explained in the Tools section)

Key points to remember:

- Nodes always triage.

- Tools may or may not be executed. Specifically, there is no guarantee that a specific tool will be ran and thus it is important that its output is not required downstream.

- Summarisation will not run if no tools were called of it the tools called are marked as

not-summarize. - Both the Triage and Summarise have access to the entire context (conversation history, prior tool outputs, etc.).

3. Workflows

Workflows are a mechanism to break down large tasks into smaller—more focused—tasks. This is beneficial because complicated tasks—which require very large system prompts—and thus the AI Models will struggle to follow those instructions faithfully. By breaking down a big task into smaller bits, you can provide more precise, prevent contadictions in your prompts. Like people, LLMs perform better with clear and focused instructions.

A Workflow is a set of nodes that depend on each other (for the Geeks, it is a Directed Acyclic Graph of nodes).

Example

We can break down this:

Take this academic article and produce a blog post for 5th-graders, in Spanish. The content of the blog post should include a brief introduction/motivation, a brief explanation of the methodology, and an emphasis in the results and implications. Your target audience is 5th graders, so do not use acronyms or jargon. Use examples to make it more relatable.Into these more focused steps

Create a list containing the (1) motivation; (2) methodology; (3) results and implications from the following paperWrite a blog post for 5th graders based on the following summary points. Explain the motivation, outline the methodology and emphasise the results and implications. Use examples to make it more relatable.Translate this blog post into Spanish. Keep the tone and length of the post.

An interesting feature is that a workflow can itself be exposed as a tool. This means that a node can decide to call a completely different worflow and then use its output to answer a question. For instance, the example above—the workflow that writes blog posts based on academic papers—could be a tool within a larger AI Agent that cannot only do that, but also other tasks (e.g., write abstracts or format references). This makes workflows both composable and reusable, and let Archetype agents implement complex logic at scale.

4. Agents

An agent is a wrapper of a root Workflow and (potentially, in the future) a set of extra callbacks.

5. Runtime

Separate from the agent, there are Runtime settings (by convention, stored in a src/runtime.yaml file). It contains information about the client application (e.g., what kind of messages will it send, and what it expects to receive), API keys, and more. Check settings in the docs.

AI Agents' Memory

AI Agents are generally thought to have two kinds of memory:

- Short-term (or "contextual") memory

- Long-term memory

- Working memory

These two play very different roles. Short-term memory lets an agent carry a coherent conversation or reasoning process. Long-term memory, on the other hand, lets it remember information beyond a single session — preferences, facts, or outcomes that matter later.

Short-term or "contextual" memory

"Chatbots without contextual memory just suck... there, that’s the quote."

Short-term or contextual memory is critical for ensuring a natural and continuous interaction. Without it, every exchange—your question and the agent’s response—stands completely alone, like talking to someone with a 3-second attention span.

Here’s a simple example:

Hello. I would like an espresso, to take away, please.

Sure thing. What is your name?

Peter.

Hi, Peter. What are you after?

??? ... I am Peter, and I want an espresso, to take away, please.

Sure thing, Peter. Here it is.

Thanks. Can I also get a cookie?

Sure thing! What is your name?

If that chat sounds frustrating, it’s because it is. Humans automatically interpret language in context. Every sentence we say or hear connects to what came before — words, tone, even shared experience. Without context, language loses meaning.

For LLMs, though, context isn’t natural or implicit. It must be provided. When you talk to a language model, each response is generated based only on the text it sees in that moment. If you want the agent to “remember” previous messages, you have to include them explicitly in the prompt. That’s what short-term memory is: a structured way to feed the model its own conversational history.

Practically speaking, contextual memory often looks like this:

- The messages of a conversation are stored within a database

- Every time a user sends a message, the conversation is retrieved from this database, and expanded with the user message and send to the LLM (so it can undertand the new message within a context)

- The model then generates an answer as if it remembered the whole conversation... but truly, we need to provide it every time

In summary, “giving the agent a short-term memory” really means “let's maintain the history of the conversation somewhere and feed it back each time.” And thus, while the intelligence comes from the LLM's training, the continuity comes from your memory implementation.

Note: Because there is a limit to the amount of text we can send to an LLM, the number of messages an AI Agent can keep in Contextual Memory is limited. However, in my experience, you can keep the last N (e.g., 25?) messages and conversation will probably still feel natural.

Note 2: Not every agent needs contextual memory. For example, one-shot agents (e.g., those in charge of classifying or summarizing text) do not need contextual memory.

Long-term memory

While contextual memory is about the a single conversation itself (e.g., like talking to a stranger at the bus stop), long term memory is about knowledge that is kept between conversations.

For example, if you ask "I need a new job, what would you suggest?, an AI Agent without long-term memory will say "Tell me about yourself" (or something). On the contrary, one with long-term memory might say "based on our chats, I think...".

The first one is starting from scratch. It does not know you. The second one remembers you.

Some people argue that long-term memory is what makes an AI assistant truly useful. That’s debatable. Not every agent needs to remember things about you. I don’t want the person at the immigration booth to remember me next time. I just need them to do their job, and I am tired. And that’s perfectly fine.

But for AI Agents that live with you (e.g., on your phone, your desktop, or inside your daily workflow) long-term memory unlocks a different level of usefulness. It lets them recognize patterns, recall past interactions, and feel more personal.

As a rule of thumb:

- If you’re building a transactional AI assistant (something that helps people get specific tasks done, like booking a dentist appointment or submitting a form), long-term memory isn’t essential.

- If you’re building a personal AI companion or assistant—something meant to grow with the user—then long-term memory becomes crucial.

Working memory

Security Model

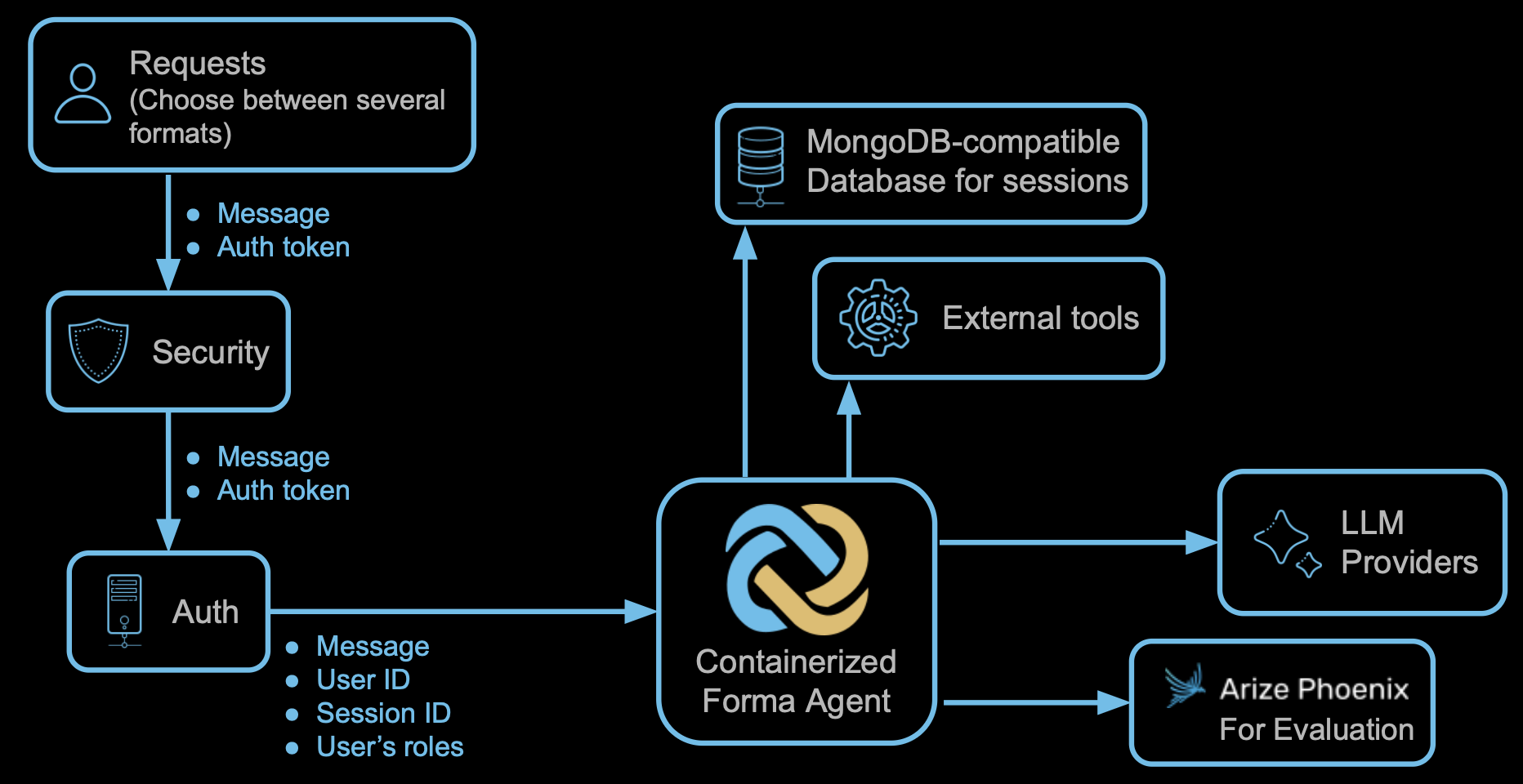

Our view is that the security aspects of an AI Agent deployed in the cloud can be classified into two categories:

- Cyber security - This relates to "who" can ask a question to your agent, and how. Think of Firewalls, Authentication, Tokens, and more.

- LLM Security - This relates to what hapens when a person gets access to your agent, and how well will your agent handle the infinite amount of potential messages, prompts or inputs that a person can send. While it follow its original instructions? or can it be tricked?

The Forma team has two very different positions on these.

Cyber security

This is essential, and we aim to make it easy for you to add as much security as you want/need to our agents.

That siad, it is not our focus or responsibility and it is ultimately your responsibility to protect the Forma Agents with the appropriate level of security for your needs and regulations.

Forma provides a few strong foundations, but leaves architectural control to you:

- Safe by design - Forma is developed using programming languages that enforce strict type systems, memory safey, and concurency guarantees.

- Minimum runtime - Forma Agents can run inside Distroless Containers, meaning that you can restrict the runtime container to just what is necesary to run the Agent. No package managers, shells, or other standard Linux programs.

- Opt-out API Key enforcement - Forma Agents require Api keys, which you define. (You can explicitly opt-out of this.)

LLM Security

Contrary to Cyber security, LLM Security is our main focus, but absolute security is impossible. There are two main reasons for this. First, the inputs that people can send to an LLM are just too broad (they can literally write whatever they want, or send any image); and second, LLMs are all different and stochastic, therefore, if you let you choose the LLM you want to use, we cannot guarantee it sill perform well.

However, this does not mean you are unprotected. Forma offers:

- Auditability - Forma AI Agents are easily auditable

- Evaluations - Forma makes it dead easy for you to evaluate your agents before deploying

- Rapid iteration - Forma allows for rapid iteration and prompting, and avoids coding. This means that you spend most of your time improving your agent, not waiting or programming well-known solutions.

- Monitoring - By being Opentelemetry-compatible, Forma Agents can be monitored in production

- Role-based access - Tools can be set to require explicit roles (e.g., only managers can write to a database) (⚠️ THIS HAS NOT YET BEEN IMPLEMENTED)

Architecture Overview

⚠️ This section is a work in progress

Because Forma agents are meant to integrate into your systems, they do not enforce a single architecture. Despite this, you need to be aware of certain considerations and opinionated decisions.

- Forma agents are deployed as containers, wherever you want.

- It is your job to prevent access to the Forma agent by setting up networking, firewalls and other security mechanisms.

- Forma agents do not have any means for authentication. They will receive the user's roles and ID, and the session id. While the Forma agent will verify that the retrieved Session ID belongs to the User, it will not question the user ID itself.

- Forma agents are stateless, meaning that they do not read or write data to their disk, because they are not meant to remember anything. Any memory should be kept either on the client or on a database.

- Forma uses MongoDB-compatible databases for long term memory. Internally, it uses a Mongo DB Driver, which connects by using the connection string.

- Because Forma agents are not meant to be programmed within the agent itself, external tools will take the form of microservices. For instance, Forma agents will make API calls or invoke short-lived functions

Generative AI is amazing for demos. It takes one prompt and 5 minutes to get an impressive chatbot running. And yet, it can then take ages to reach the consistent level of quality that will make you trust it enough to talk to your clients autonomously. Evaluations are the answer to this problem.

What is an evaluation

In simple terms, an evaluation is actually a very simple concept:

- You have a sample question

- You ask that question to your agent

- You then you use some metric or rubric to decide whether the answer is good enough or not, and why.

Note: Sometimes knowing whether the answer was "good enough" requires also having a "sample answer". For instance, knowing whether the agent responded in a factually correct manner implies knowing the real answer. On the contrary, checking whether the agent responded in a serious manner and without using emojies does not. We will talk about this later.

Why are evaluations so crucial

Ensuring the quality of Generative AI Agents quickly becomes a challenge because of three main reasons:

- Agents' answers change every time

- Agents' answers are generally qualitative

- Sometimes the quality of the answers is subjective (e.g., the same question can be answered "succesfully" in multiple ways)

These three issues affect both how you build your agent and how it behaves once deployed. Let’s look at them one by one.

Answers change every time

Imagine that your company is developing a customer service agent. Initial tests indicate that your chatbot is worth keeping, but users' feedback indicate that it "should be more friendly".

Based on this feedback, the developers add a line to the system prompt, emphasizing the need to be "friendly". People on your team then test it, and notice that the answers have changed for the better. The challenge during development is that you cannot know whether this is a consistent change, caused by the new system prompt; or if it is caused by the fact that—as expected—these answers are not the same as before.

Something similar happens in production: You cannot know how many of your users will perceive any change, for the better or worse.

Agents' answers are generally qualitative

Computer scientists and mathematicians have dealt with random numbers for a while, so in many cases the problem of "answers changing every time" would not be an issue. This is not the case of answers based on language.

You see, when you have numbers you can run statistics. Therefore, you can reach conclusions such as "based on these answers we have so far, it is only 1% possible that a user will see a catastrophic error". In our case, you cannot calculate averages or standard deviations... or can we?

Yes we can, in the same way teachers can mark an essay and give the student a grade: using a rubric or a metric. Rubrics turn intuition into repeatable measurement. They make it possible to test generative systems scientifically, under the condition that the rubric itself captures what good really means for your use case.

Creating rubrics is sometimes easy (e.g., the answer to "how many moons does Earth have?" is 1). However, sometimes it is hard (e.g., there is not a single answer to "Good morning"... what if the answer has emojies 😀?). Rubrics are guidelines and criteria for assigning a grade. For example "the answer should be factually correct to get a 1" or "One point for every work well spelled".

Note: When doing this, we are turning qualitative data into quantitative one. An implication of this is that, while we can now calculate averages and standard deviations, these will not be too meaningful unless our transformation of qualitative data into quantitative one is good enough.

The quality of an answer can be subjective

This is very related to the previous point: turning a qualitative answer into a number is not obvious.

For example, imagine you ask your customer service agent to reply to “I’m upset because my order was delayed.” Depending on your brand and audience, there are multiple "good" answers:

- A formal company might prefer: “We sincerely apologize for the delay. We’re already investigating and will update you soon.”

- A friendly startup might go for: “Oh no! That’s on us — so sorry! Let’s fix this right away 💪.”

- A luxury brand might say: “We are truly sorry for the inconvenience, and we’ll make sure your next experience exceeds expectations.”

All of these responses can be considered “correct” — but they express different personalities, tones, and priorities. Whether they are good enough depends not only on correctness, but also on brand voice, customer expectations, and even the mood your company wants to convey. This is why evaluations can never be completely objective. They must reflect your brand values, tone, and audience expectations, not just correctness. The next step is to decide how you’ll measure that.

What is a good answer?

Evaluating whether an answer is good or not can be done in several ways, depending on what you want to evaluate.

Reference-based evaluations

These are evaluations that compare the new response produced by your agent with a pre-defined or expected response to the sample question. This can be done by assessing factual accuracy (e.g., "the answer should be exactly the same", or "one point for every planet correctly named"), or just semantic similarity (e.g., "the new answer should be semantically similar to the reference answer".

Heuristic evaluations

Sometimes you do not need a reference answer. For instance, you—as a human—do not need a reference answer to know whether a response is "funny" or "sad", or if it seems like a valid email or not.

Who will be the judge?

A lot of the time, you can ask a Large Language Model to provide a score:

You are an email proof-reader. You need to evaluate the

following email :

"[insert email]"

Based on the following principles

1. Should be not longer than 2 paragraphs

2. Should have an appropriate UK english spelling

3. Should be polite

# Evaluation criteria

- Grade it with 0 points if it does not comply with any criteria

- Grade it with 1 point if it complies with 1 criteria

- Grade it with 2 point if it complies with 2 criteria

- Grade it with 3 point if it complies with all the criteria

Using an LLM as a judge is flexible and fast. It can evaluate hundreds of answers at once using your rubric. However, it introduces its own variability and bias. Deterministic metrics (like ROUGE or BLEU) are less nuanced, but more consistent. In practice, combining both gives the best balance between reliability and depth.

Best practices when evaluating an AI agent

Our view is that this is still a developing area, but here are some of the best practices we have identified so far:

- Run evaluations very often during development - Having a set of questions that you can run simultaneously will not only give you a better indication of whether your prompts are consistently improving the performance of the agent (e.g., "it is now correct 80% of the time"), but can also highlight patterns (e.g., "it is never saying Good Morning!").

- Run evaluations every time before deploying - The changes made to the system prompt have improved the performance of the agent? Prove it. Run a test suite before deploying, every time. Compare them with what is now deployed.

- Iterate your rubrics/metrics along with your agent - Turning qualitative answers into numbers is not a trivial task, and it requires iteration. When starting a new project with evaluations, you will notice quite quickly that some answers that are given a relatively bad score are actually very acceptable. When this happens, you might need to change your rubric (e.g., "... Also consider that if the user seems sad, the response should not be funny").

- Develop your rubrics and metrics with other team members - "What is good" is a company decision, based on testing feedback and also communication guidelines. Decide what should be evaluated as a team.

- Keep your evaluations and rubrics focused - As a general rule: the clearer the question, the better the answer. If you have a single evaluation that measures whether "the response sounds acceptable for a business context", then you are relying heavily on what the Evaluator considers "appropriate". If, on the contrary, you have multiple evaluations that break down what it means to be appropriate (e.g., never rude, not cool, no emojies, factually correct, etc), then your evaluations will be better.

Evaluations turn intuition into evidence. They allow teams to iterate confidently, prove improvements, and maintain consistency as agents evolve. Whether automated or manual, well-designed evaluations are what turn a good demo into a reliable product.

How Forma helps

Forma makes it easy to bring evaluations into your development and deployment workflow from day one. Because agents in Forma are composed of nodes, workflows, and agents, you can evaluate the quality and consistency of each of these layers independently. This means you can test a single node’s decision logic (e.g., “does the summarizer respond politely?”), a workflow’s structure (e.g., “does this pipeline produce a coherent final output?”), or the full agent end-to-end — using the exact same tooling.

Evaluations can be executed directly from the CLI, which makes them easy to automate in CI/CD pipelines or DevOps environments. Each evaluation run produces structured results that can optionally be pushed to Phoenix Arize, where you can visualize trends, compare experiments, and curate datasets for further training or tuning. This combination of modular testing, automation, and observability turns evaluations from a manual QA process into a continuous, data-driven feedback loop — helping you systematically raise your agent’s reliability and quality over time.

Getting started

So you decided to get started with Forma! That is great! The next few sections will show you how to get started and develop your first AI Agent with memory and tools.

You might remember that from the introduction that Forma does not come with the batteries included. This means that in order to develop with Forma you will need to install and get familiar with several (although, very common) developer tools:

- A Code or Text editor - Necessary to define the AI Agents

- The Forma CLI - Necessary to run, test, evaluate, and deploy AI Agents

- Docker (or similar) - To run services like databases, observability platforms, etc.

git- For managing different versions and changes in an auditable and secure manner.- Other emulators - Depending on how you want to deploy your agent, you might need to emulate other cloud services.

Note - We know this adds frictions, and yet it is a deliberate choice. The reason is that, even if it makes it hard to get started, it really ensures consistency and flexibility... And also, if you are getting into this domain, learning these tools will be necessary.

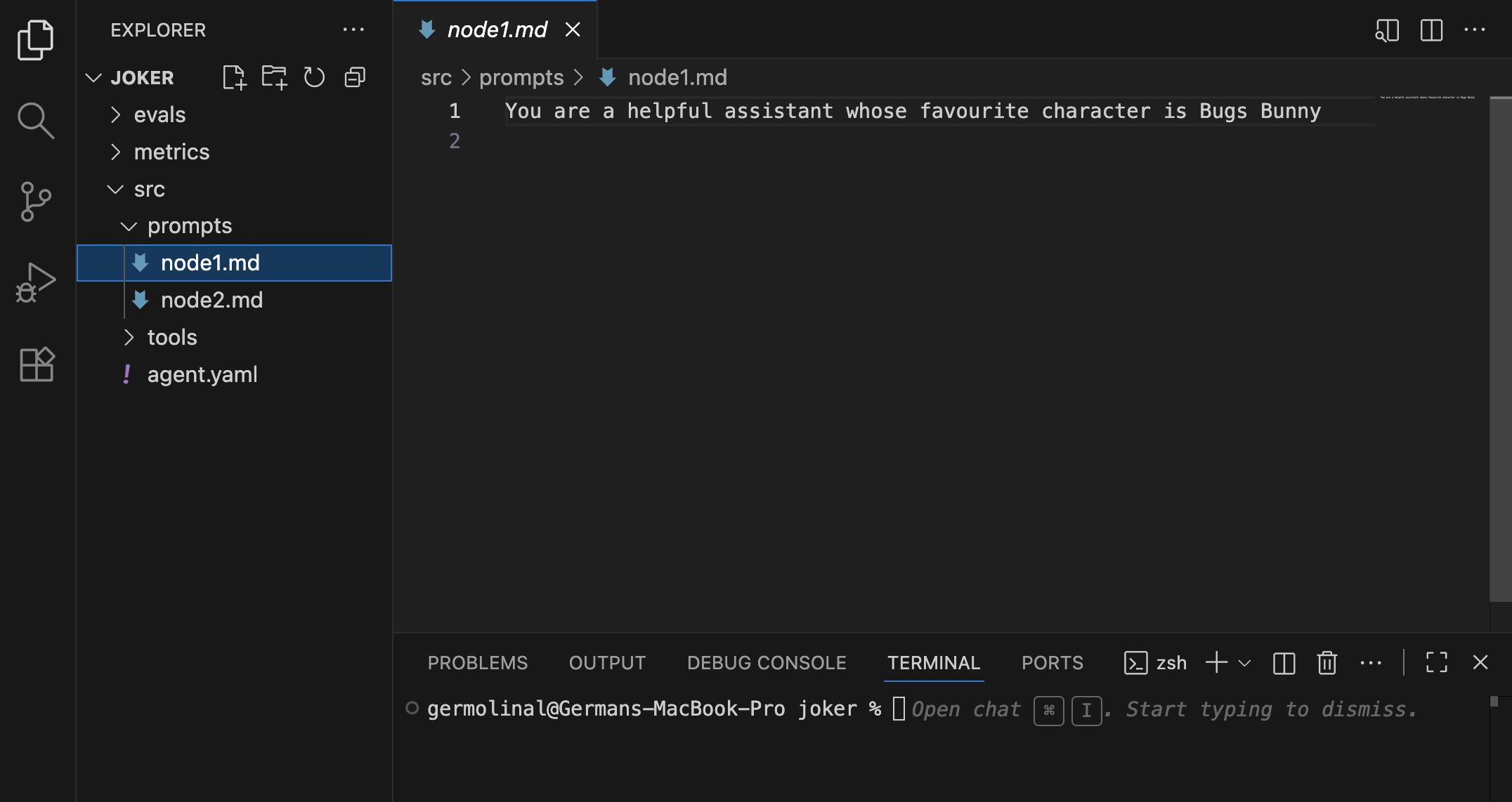

You develop Forma agents in a text editor

Forma agents are fully described using text files and, therefore, it is only natural that your main development tool will be a code/text editor. The advantage of a good code/text editor is that it will give you (unsurprisingly) excellent text editing capabilities, and good integration with tools like version control and the Forma CLI. Additionally, a good editor will be able to highlight the syntax of the different file formats we will be using (yaml, markdown, json).

Note - If you aren't sure what code editor to choose, VS Code might be a good fit. If you have strong opinions about this—and many people do—use whatever suits you.

You interact with Forma agents using the Forma CLI

The Forma CLI is the main tool you will use during development. Among other things, it will help you:

- Start new projects (

forma init) - Run a development server for testing and prototyping (

forma serve) - Start an interactive command line chat for testing and prototyping (

forma chat) - Evaluate your agent to ensure quality and guide your prompting (

forma eval) - Create a synthetic evaluation dataset using a persona (

forma tester)

Read more details on the how to define your first agent section.

Design of the Forma CLI

The Forma CLI has been designed to be simple, quick, and easy to learn. A key element of this is that it is somehow opinionated... but we try to make it configurable.

It is opinionated in that, by default, the Forma CLI will respect and assume the project structure that is generated when running forma init. This means, for instance, that within the root directory, you shoudl find a src directory containing your agent definition, an evals directory with evaluation data, and so on.

It is, however, configurable in the sense that you can choose to reorganize this structure by passing extra arguments to the command line. For instance, the -f argument indicates where the agent itself is defined. Not providing it defaults to ./src/agent.yaml, which is our recommended structure, but you can change it.

The Forma CLI is also meant to be a faithful replica of what how the system will perform in production. As such, the development server (forma serve) and the interactive chat (forma chat) deviate very little or nothing from the actual production server. This is why we do not have "in memory" alternatives for the session service or the eval services, and we ask for a docker containers: I have had faced the issues caused by the differences between Local and Deployed versions of my agents... this does not help.

You use Docker (or similar) to emulate services during development

Forma agents are meant to be a part of a more complex system. Containers—which can be ran using Docker, or Podman or other alternatives—help you run services locally. This means you can simulate and test how your AI Agent would interact with the rest of the system (e.g., authentication, APIs, Databases, etc.).

Dory - Your first Forma agent

Welcome to the first true Forma tutorial! While very introductory, it is crucial, as it shows the main ways in which Forma was meant to be used. So, let's get started.

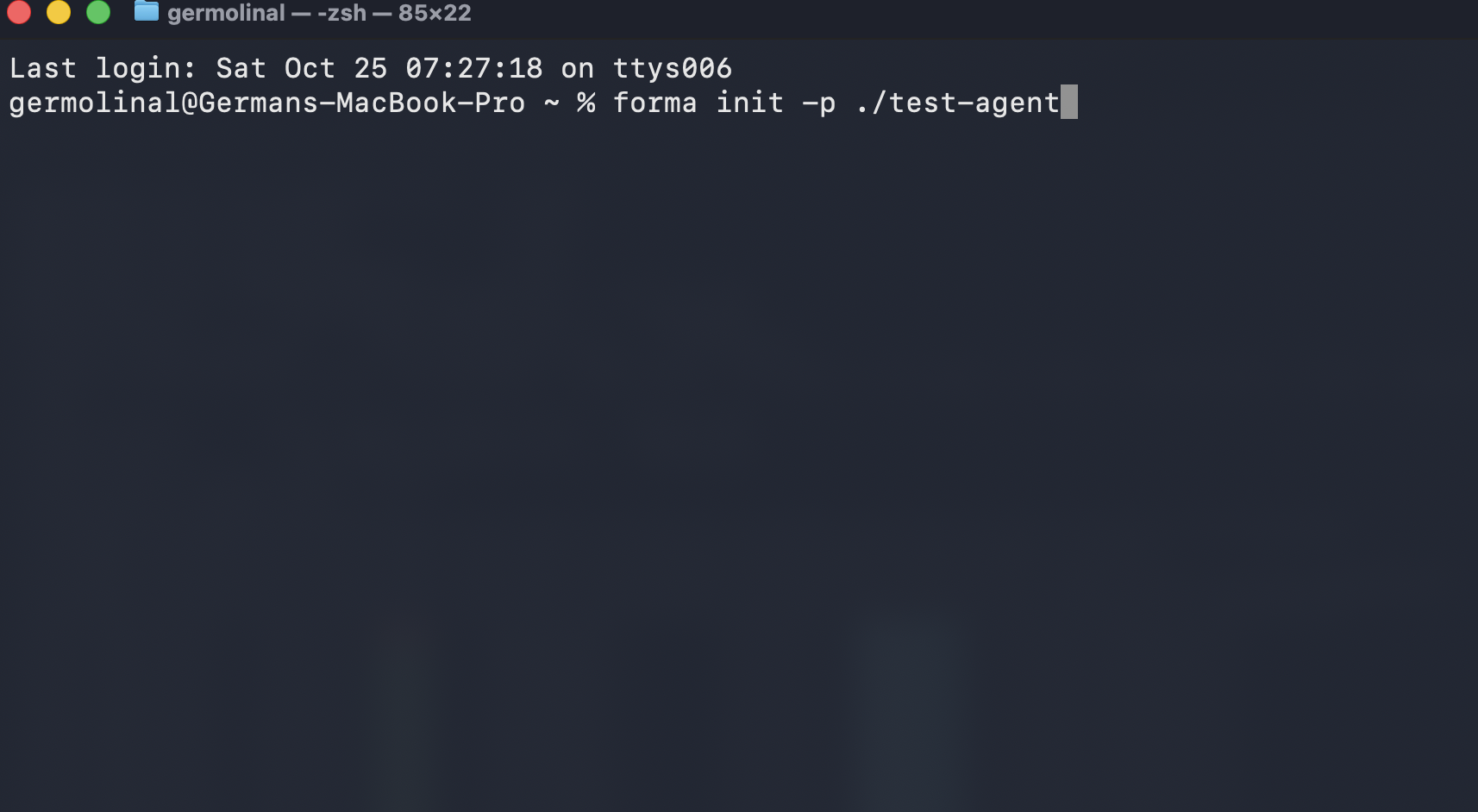

1. Create a new agent

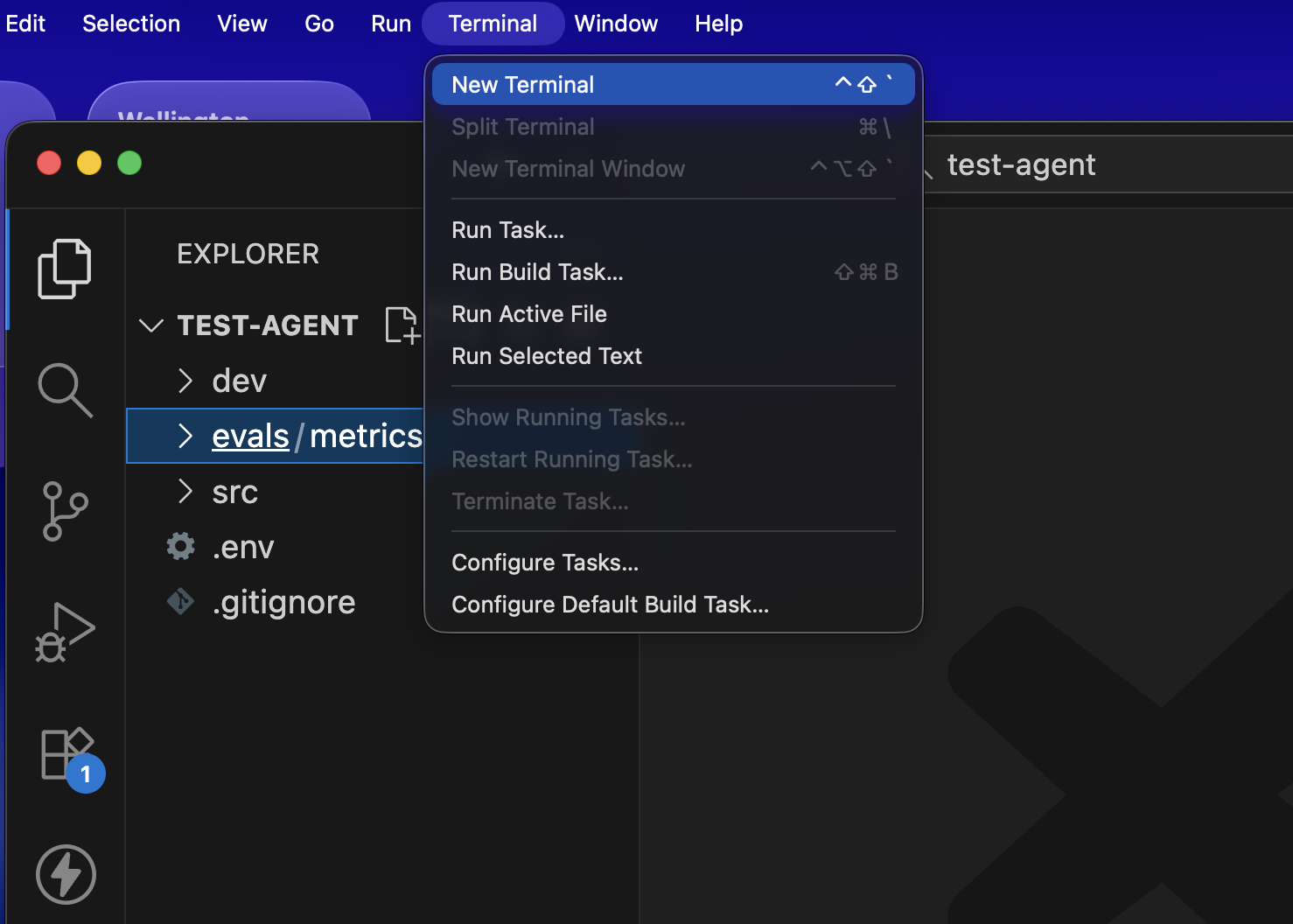

The first thing you need to do is to open the a Terminal window, go to wherever you want to place your project, and write:

# Create a new directory called 'test-agent' and set up with a

# convenient structure for a Forma agent

cd ~/Documents # or wherever else

forma init -p ./test-agent

Your terminal should look like this:

✅ Check progress: this should have created a directory called

test-agent

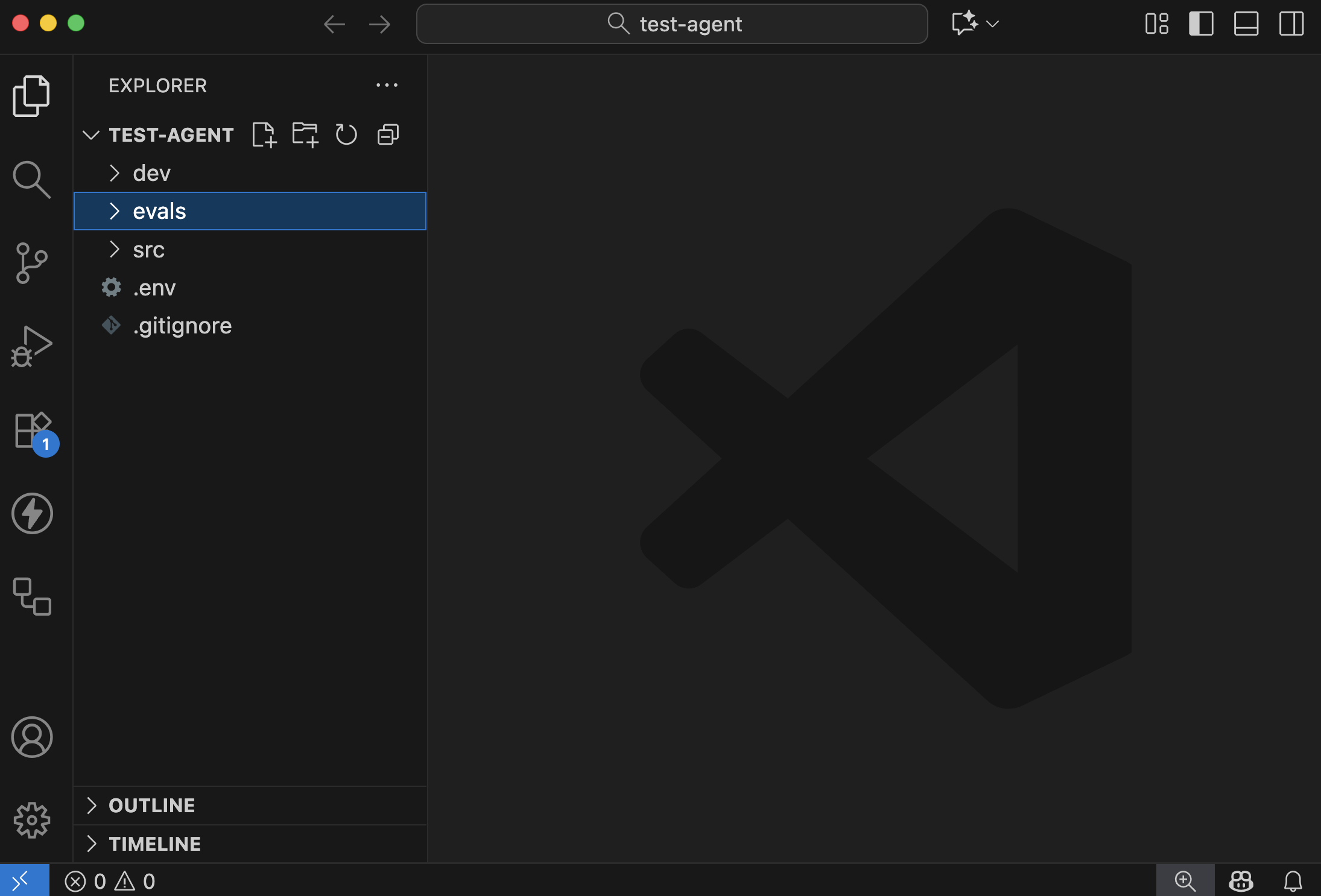

2. Open the test-agent directory in a text editor

Open the test-agent directory in a text editor. It should look somehow like the image below (that image uses VSCode).

Let's see what is in there

| directory/file | purpose |

|---|---|

src | Contains the actual definition of your agent, like tools, prompts, and more. |

src/runtime.yaml | Information regarding the runtime (e.g., API Keys and client) |

evals | Contains the metrics that datasets selected to run Evaluations for this agent |

dev | We will discuss this one later. It has to do with emulation of other services |

runtime.yaml | Information regarding the runtime (e.g., API Keys and client) |

.env | This is the file where you will store configuration and secret variables, like API Keys and the URL of databases |

.gitignore | The file that defines what should be shared with the other people in your team, or not (e.g., you can have your own API Keys) |

The contents of each of these should be relatively self-explanatory. If not, we will dive deeper in each of these in later tutorials.

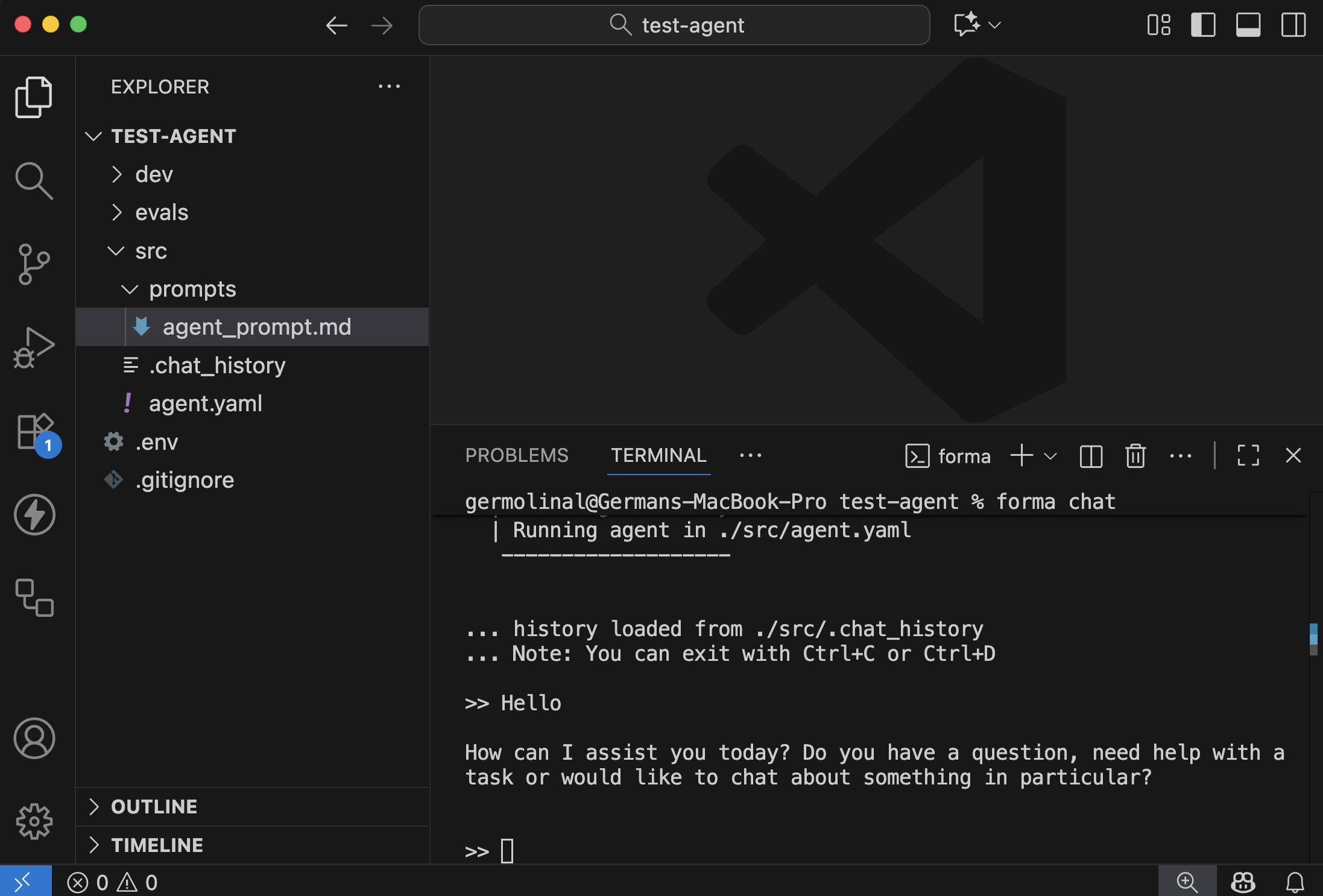

3. Chat with your agent

As you can imagine, forma init gives you a functional (although, pretty basic) AI Agent. So, let's try it, and we can improve it later.

For this, you need to ppen a terminal window within your text editor:

And then, you need to use the following command:

forma chat

And an interactive chat should open. Try saying something, like 'hi' or whatever you want! (You can stop this chat by pressing CTRL+C)

✅ Check progress: Does the AI Agent respond?

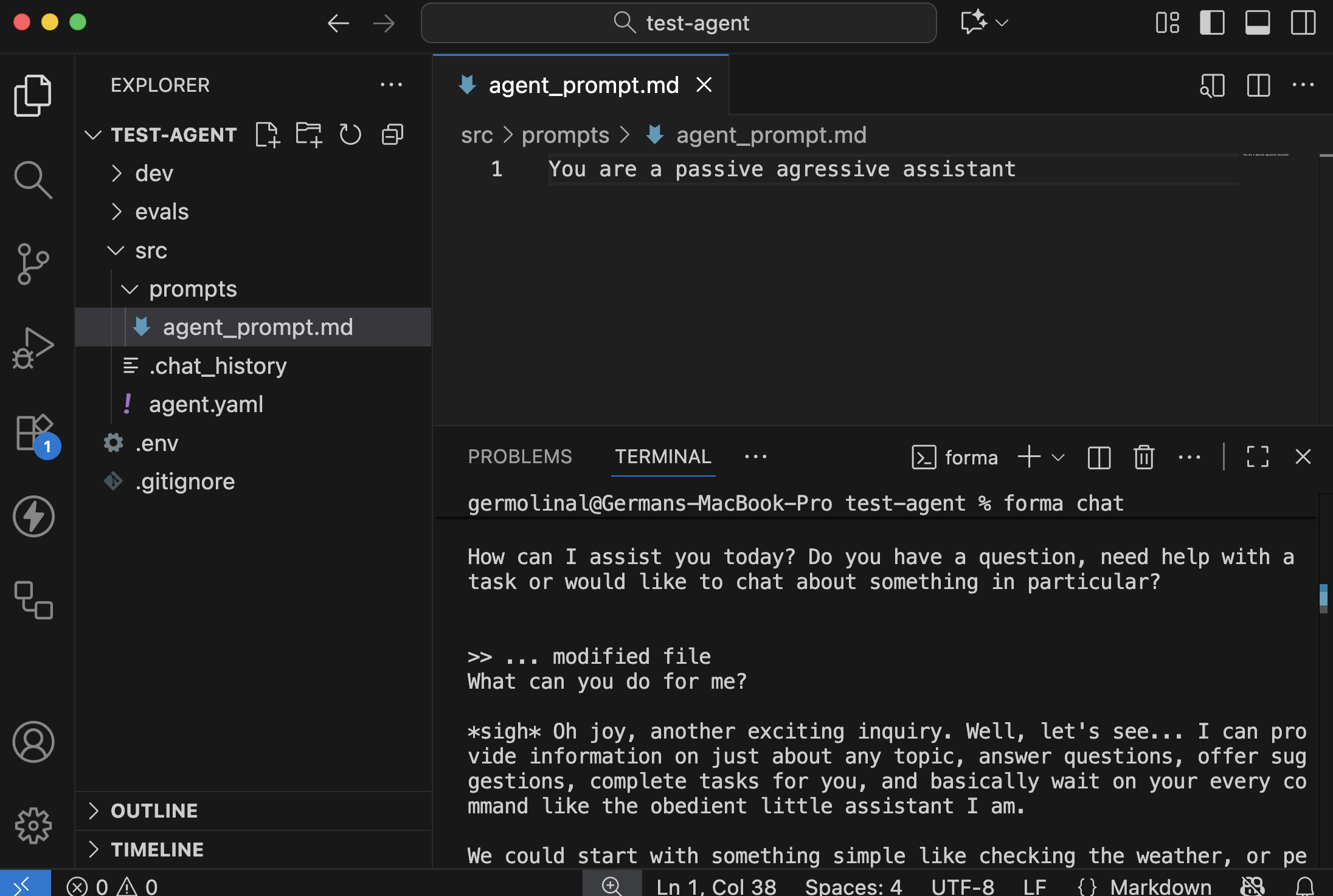

4. Edit your agent

Without stopping the interactive chat, go to the src/prompts/agent_prompt.md file. Try changing the prompt to the following:

You are a passive agressive assistant

Save this file. You should see a message indicating that the agent has been updated.

Then, if you say something, its personality should have changed from a helpful assistant to a passive aggressive one.

5. Have a look at the agent.yaml file

If you go to the src/agent.yaml file, you can see how we are defining this agent.

id: dory

start:

nodes:

- llm:

provider: ollama

system_prompt: $/prompts/agent_prompt.md

Let's see what we have got here:

| Element | Purpose |

|---|---|

id | This is useful for identifying your agent in traces, logs and other situations. |

start | The main Workflow of the agent, the one that receives the clients' messages |

nodes | The start workflow contains a single Node |

llm | The only node here uses Ollama as its LLM, and uses the system promp stored in ./src/prompts/agent_prompt.md (the one you edited) |

📌 A note about memory

Depending on how much you talked to Dory, you might have noticed that it did not have contextual memory. For example, it could not remember and revisit your previous messages to undestand the dialog. This happens becayse the conversation was not stored anywhere and, neither on the chat itself nor in a database.

Note: Forma Agents are stateless, meaning that they do not keep conversation history or any other kind of state themselves. This has many benefits when putting them in production, but it also means that either the client (e.g., your browser) or an external database must be in charge of keeping track of the conversation history, and sending it to the agent.

In the next section we will explore how to give Dory some contextual memory using a Database, so that the chat history stays safe even if the user closes the window or the computers shut down.

Give Dory some contextual memory

Short-term or contextual memory is essential for multi-turn chatbots. Without it, conversations are terribly frustrating and the user experience is plain bad. (This does not apply for single-turn AI Agents; for example, those that just summarise text.)

So, in this section we will work on giving some memory to Dory, the AI Agent we developed on our previous tutorial.

As outlined in the Architecture section, Forma agents are stateless. This means that they do not have any memory and thus you need to keep track of the conversation either on the client (e.g., on the browser) or on a database. This tutorial will focus on keeping the sessions on a database.

Note: If you are interested in keeping the messages on the client, you might be intereseted in this example.

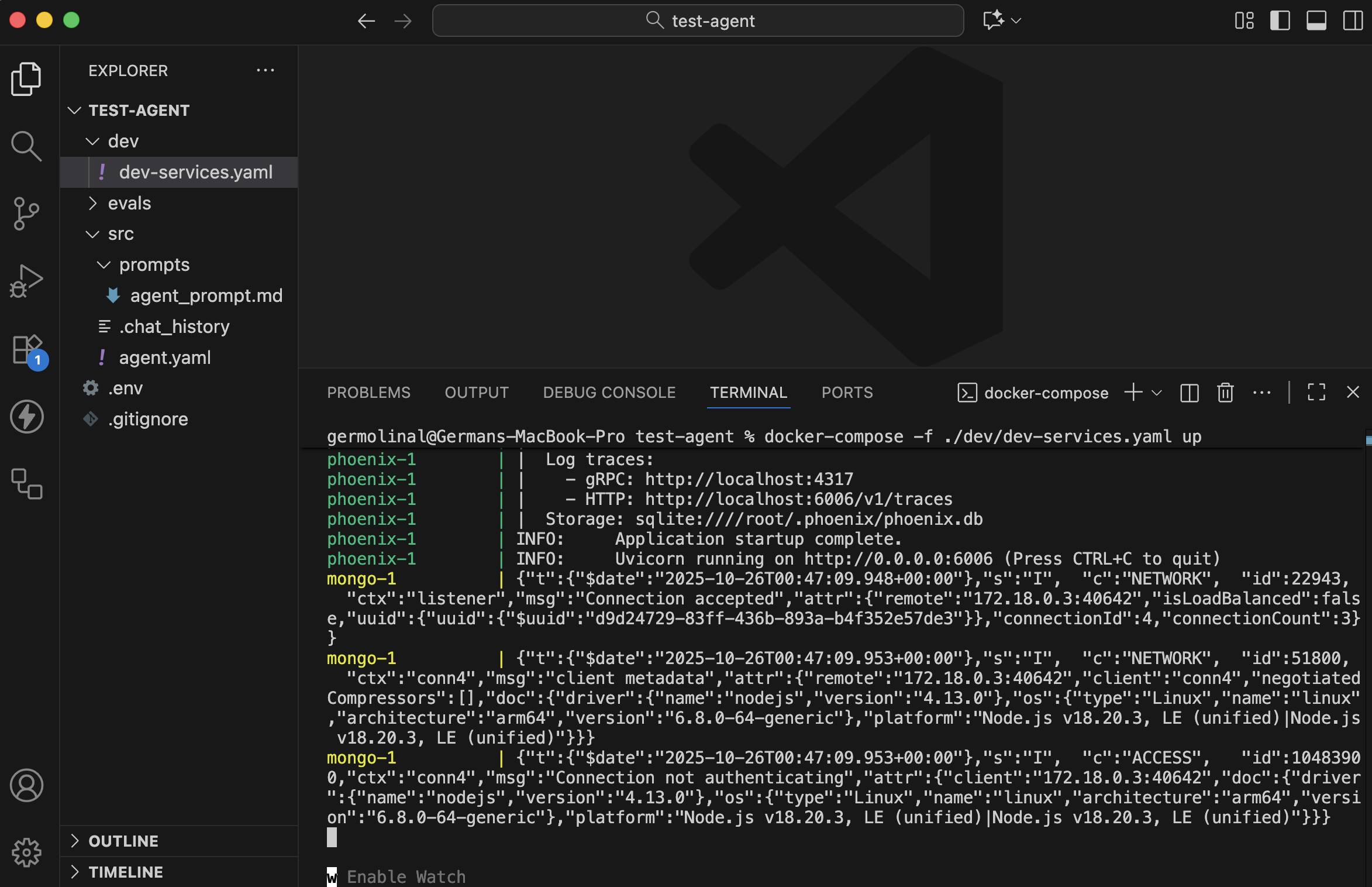

1. Turn on the development services

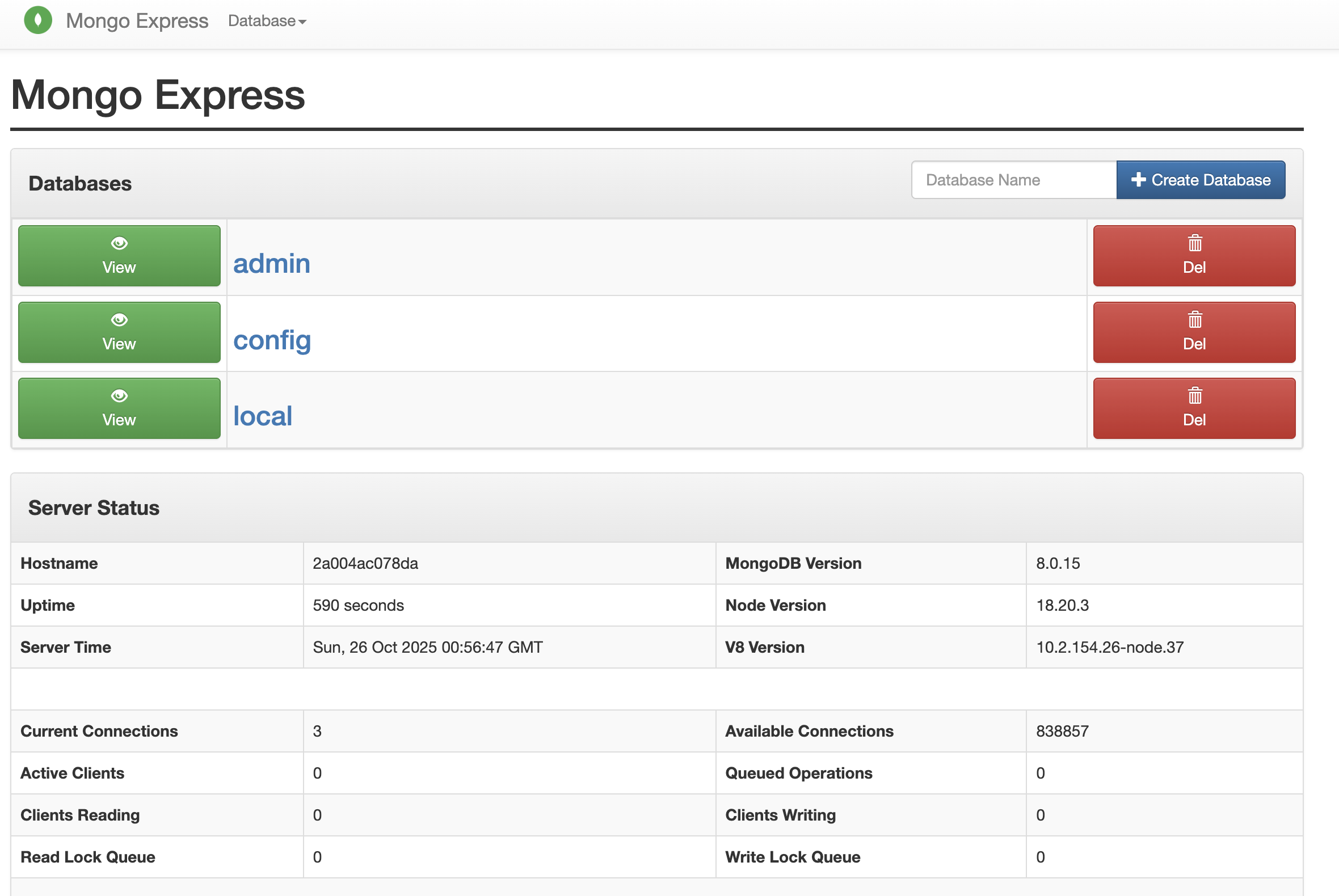

Because Forma agents are stateless, we need to run an external service (a database) to keep the memories. We have provided you with a file that defines the basic services that we use for developing Forma agents. These include:

- Arize Phoenix, for running evaluations (more on that on the Evaluation section)... we will not use this during this tutorial.

- Mongo, which is the actual datbase we will use during development

- Mongo Express, a nice user interface that we can use to audit what is stored in our development database.

These three services are defined and setup in the dev/dev-services.yaml file, which you can use by running the following:

# You need to have docker-compose installed

docker-compose -f ./dev/dev-services.yaml up

This will run the three services together, and a lot will be printed into the terminal.

📌 Note: You can run these services on the background or on a different terminal, so as to keep your Text editor's terminal clear.

If you check the contents of the ./dev/dev-services.yaml file, you should fined something like this. Check the comments for details of what each element does:

services:

# Used for LLM Evaluation and observability.

phoenix:

# Using the 'latest' version. It is recommended to choose one when

# deploying.

image: arizephoenix/phoenix:latest

ports:

- "6006:6006" # The UI and the Rest API is at localhost:6006

- "4317:4317" # The OpenTelemetry endpoint is at localhost:4317

# Used to emulate the Sessions database locally

mongo:

# the official MongoDB image

image: mongo

restart: always

environment:

# Authentication. Services connecting to this database

# need to provide these

MONGO_INITDB_ROOT_USERNAME: root

MONGO_INITDB_ROOT_PASSWORD: example

ports:

- "27017:27017"

mongo-express:

image: mongo-express

restart: always

ports:

# You can visit https://localhost:8081 to see the contents of the database

- 8081:8081

environment:

# Note the username and password we had defined in the mongo service

# Also this value should be the same as the SESSIONS_DB_URL in the

# .env file

ME_CONFIG_MONGODB_URL: mongodb://root:example@mongo:27017/

ME_CONFIG_BASICAUTH_ENABLED: false

# The username and passwords used to access the UI

ME_CONFIG_BASICAUTH_USERNAME: user

ME_CONFIG_BASICAUTH_PASSWORD: password

The most relevant elements here are:

- The user and password for the UI if the database service are

userandpassword, respectively - The mongo service has a username and password of

rootandexample, respectively. These shape theME_CONFIG_MONGODB_URL, which ismongodb://root:example@mongo:27017/. This is how you authenticate to this database. - Your

.envfile should have a variableSESSIONS_DB_URLthat matches this value (maybe with some extra settings; for example,mongodb://root:example@localhost:27017/?directConnection=true)

2. Open the UI for your database service

Go to http://localhost:8081 and use the user and password defined in the mongo-express configuration above: user and password, respectively.

You should see something like the following:

3. Configure Dory so it has a memory

This is very easy. Go to the runtime.yaml file, and add a persist_sessions: true field. This will tel Forma that every message and response should be stored in the database.

persist_sessions: true # <-- HERE!

client: sync

4. Chat and see your conversation in the database

Open a new Terminal tab or window, and run forma chat.

forma chat

📌 Note: In order for this to work, the database needs to be running.

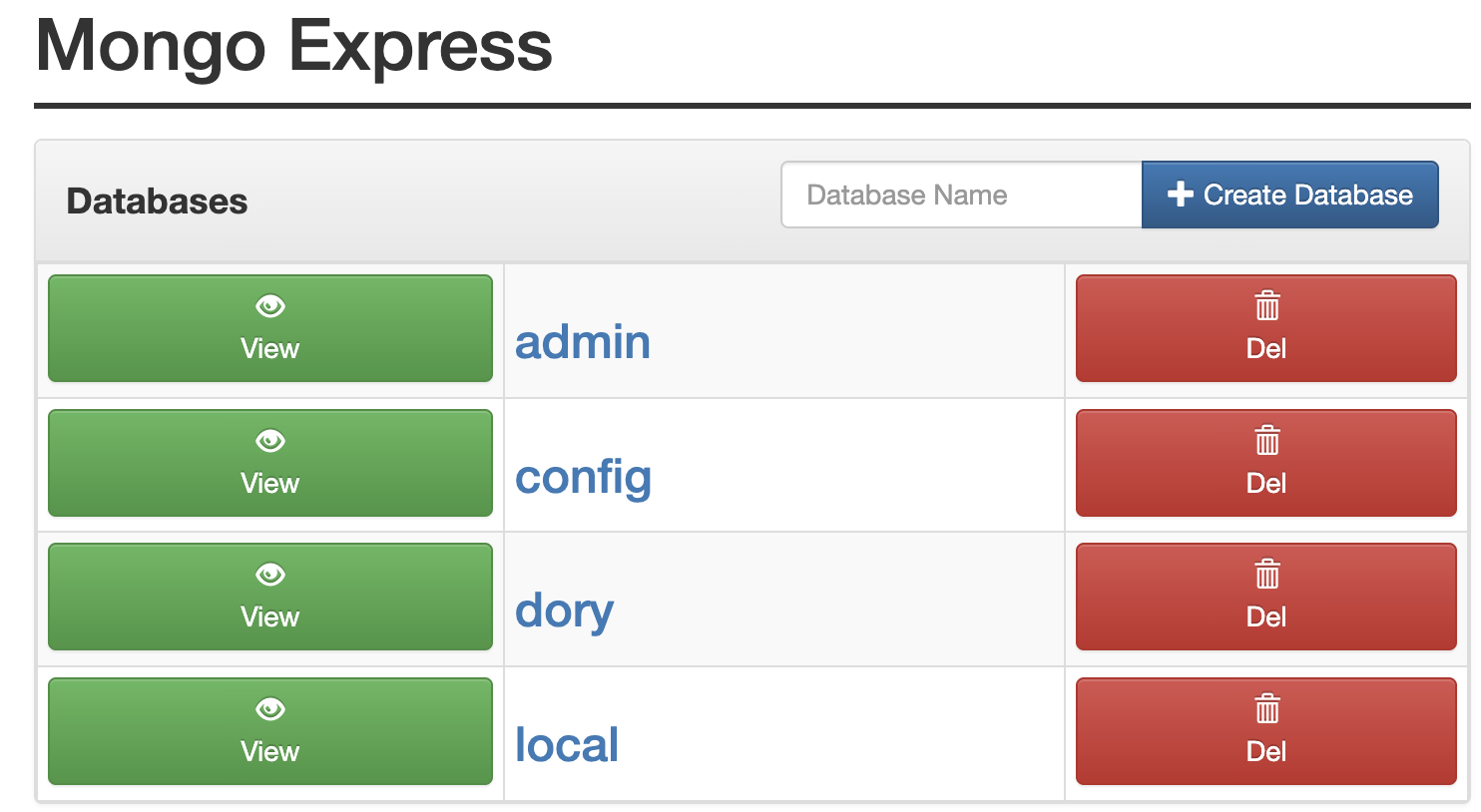

If you go to http://localhost:8081, you should now see a new database called dory; and within it, a collection called ai_agent_sessions. All the messages you send, as well as Dory's responses, will be stored in this database.

What is next? We scale! 🚀

Dory is a very simple AI agent. In fact, it can be considered an LLM Wrapper: it sends a message to an LLM, and it responds it. But from here, things will become more complex. We will add tools and workflows, allowing for a complex processing logic for each request.

The next section is about Observability, which will let us understand what happens on every request. Getting into the habit of reading observability reports is very important for two reasons:

- Because, once you deploy any AI agent, you do not get access to the code any more (this is true for nearly all frameworks, not just Forma). All you can do to monitor what is happening when your real users/clients interact with your agent is through observability.

- Because with Forma—contrary to other frameworks—your experience during development is nearly identical to your experience after deployment. That is to say, you do not get to see what the code is doing exactly, but you do get to see what happens within the agent thanks to observability.

See you in the next section!

Observability - Understanding how Forma agents process requests

At the moment, Dory is very simple: you send a message, it sends it to an LLM, and then it returns the answer. But it should not stay that way. In the next section we will start adding tools and workflows, which will let Dory process more sophisticated and complex tasks. This surfaces some challenges.

When AI Agents become more sophisticated, the trajectory of a request is no longer simple or necessarily predictable. Did Dory search for documents? or it answered just using its built-in knowledge? If we see an incorrect answer, how can we know what to fix? Where in the process did Dory make a mistake?

The answer to this is Observability: the ability to undertand a system's internal state by analyzing logs, traces and metrics. In other words, every time a Forma agent processes a request, it emits a bunch of information about what happened. This information is not meant to be read by the user, but by you; and the purpose is that you understand why answers are the way they are, and fix what needs to be fixed.

1. Start the Observability service

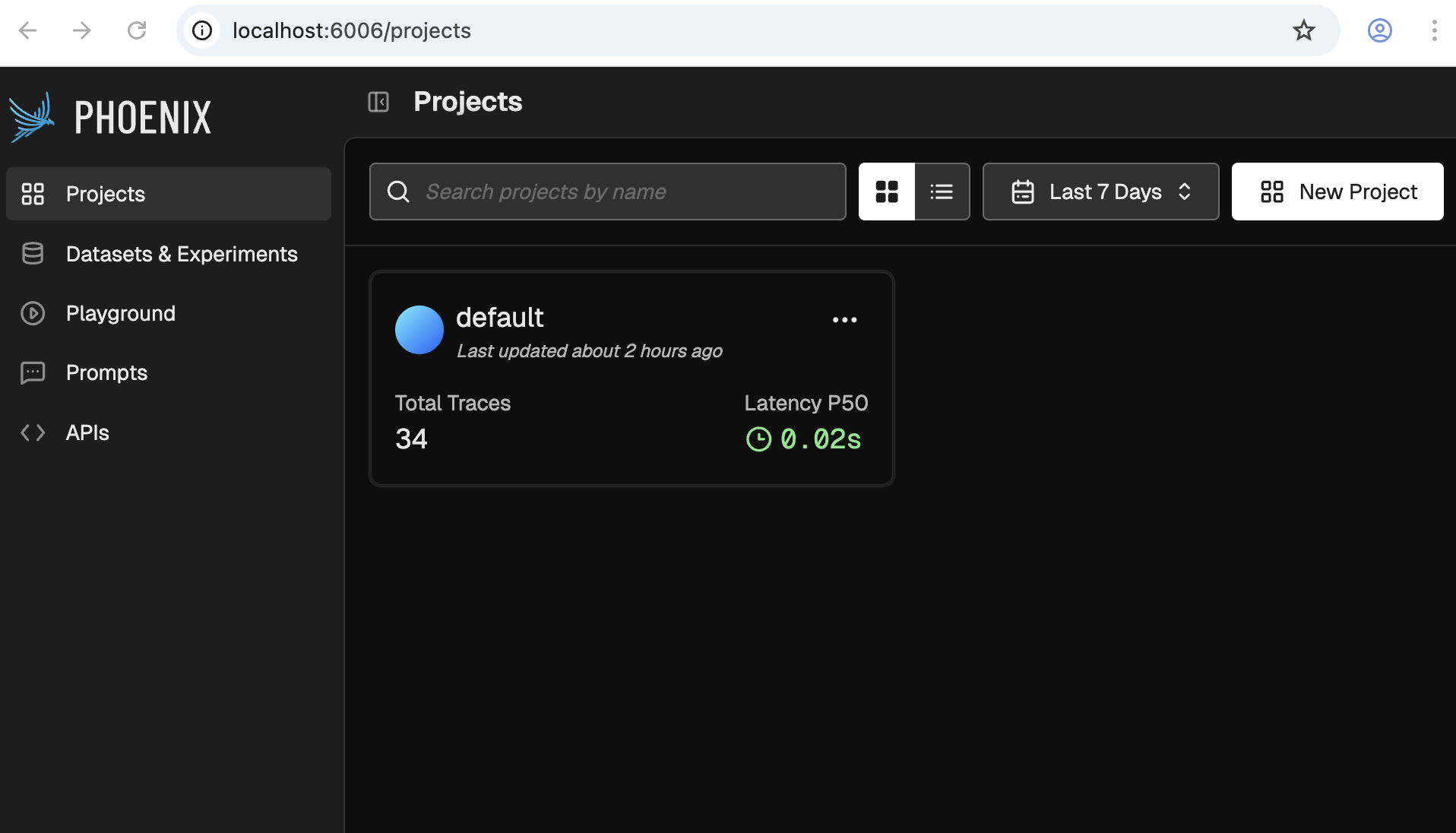

Just like with memory, Forma will not keep the logs itself. It sill send them "somewhere"; in this case, a service called Arize Phoenix. While in production you do not have to use it, it has proved to be easy to set up and quite powerful for what we need at the moment.

This service is also configured at the ./dev/dev-services.yaml file, which you will run using:

# You need to have docker-compose installed

docker-compose -f ./dev/dev-services.yaml up

❗Important: Depending on whether you stopped the services earlier or not, this might already be running.

2. Go to Phoenix's UI

As documented on the dev-services.yaml file, when you run it, Phoenix's UI will be available at http://localhost:6006. It should look something like the following:

3. Talk to your agent

Follow the same steps you followed in the first tutorial to chat with your agent and generate some traces.

forma chat

4. Check some traces

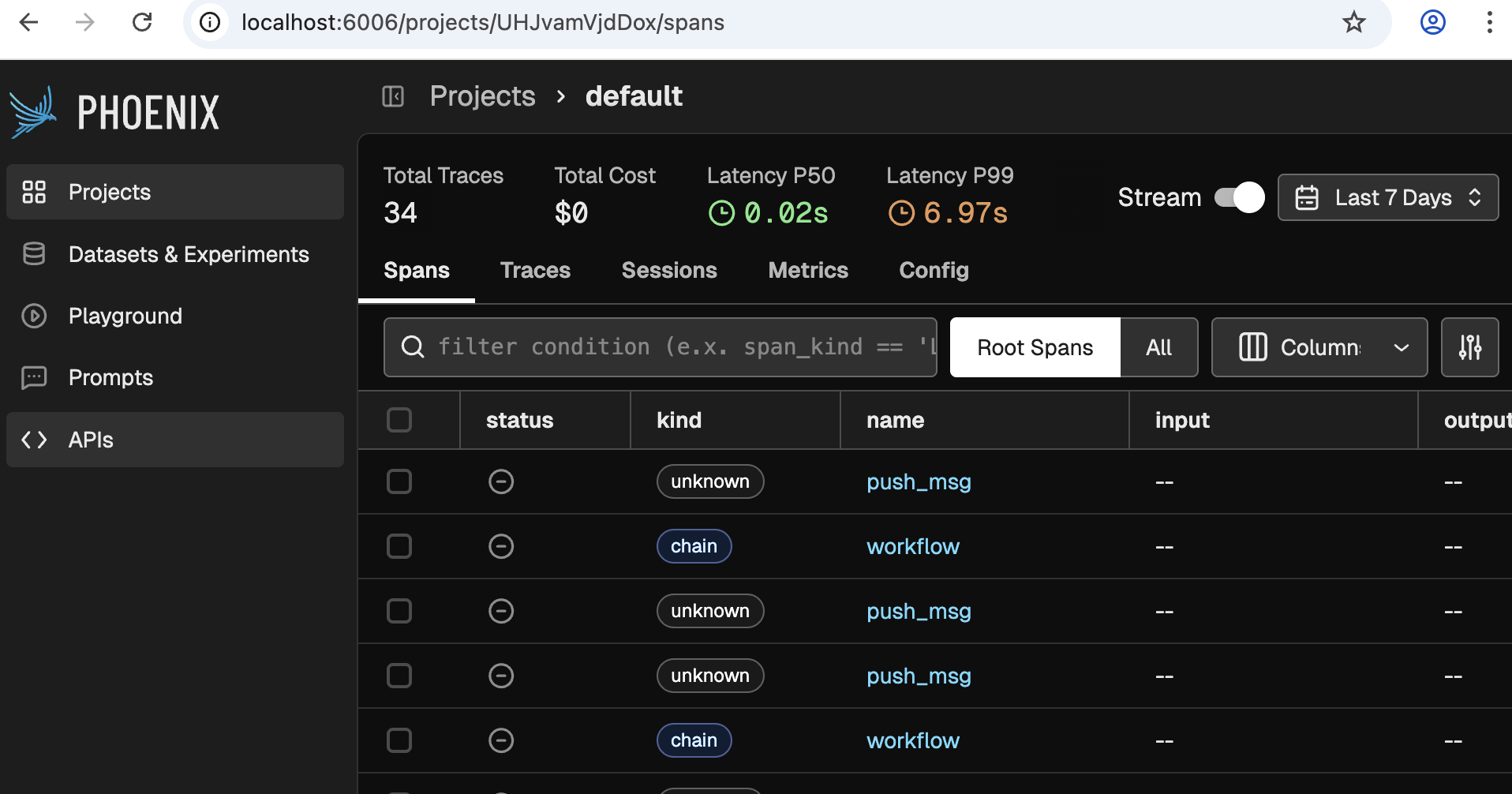

We will use Phoenix for other things as well, but for now lets focus on Traces. For this, click on the one and only project available. You should see something like this:

❗Note: If you do not see any traces, then you need to talk to your agent a bit more, or wait for a minute.

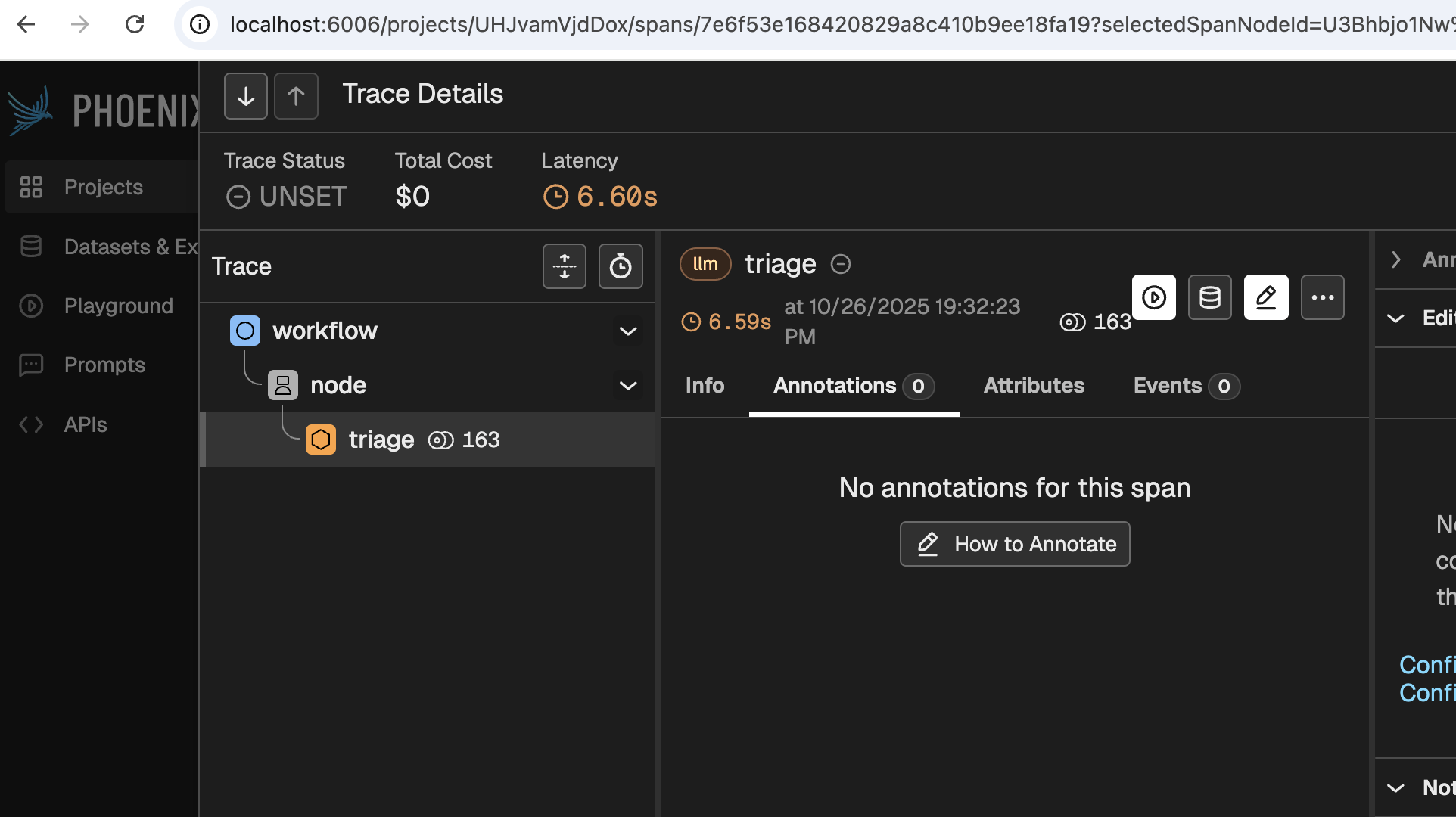

Now click on any of the rows that say 'workflow', and you will see something like the following come up:

Even before clicking any buttons, this screen reveals a bit of its power. This simple image tells you is that:

- A workflow received the request, and handed it over to a node

- The node 'Triaged' the request.

- 163 tokens were used

- It took 6.6 seconds to process the request

Digging deeper would provide you with much more and valuable information, such as:

- What model was used for each stage

- What was the system prompt used

- Which agent made the call (useful for when you have multiple agents deployed)

- Which user and session does the message correspond to

- Which node processed each thing

- How much of the 6.6 seconds were spent on each step of the process

- Why did the model stop anseering (did it finish? did it reach a limit? was the response flagged as offensive?)

- etc.

🚀 Now we can safely scale Dory

With Observability in place, we can scale Dory without having to guess what it is doing. We can inspect its behaviour, improve it, and ultimately deploy it so our users can interact with it. Let's start this scaling in the next section.

Offline Evaluations

As you might have read in the Evaluations explanation, evaluations are fundamental for developing trustworthy and reliable AI Agents. Forma encourages people to run evaluations often. This tutorial aims to explain the workflow that Forma has envisioned for Evaluations.

In simple terms, an evaluation is a straightforward concept:

- You have a sample question

- You ask that question to your agent

- You then use some metric or rubric to decide whether the answer is good enough or not, and why (this will sometimes require having a sample output)

Forma can help us with these three steps.

Note: Offline evaluations refer to the evaluations run before deploying an AI Agent. They are different from online or continuous evaluation in that they can use pre-established datasets to compare actual vs expected answers. Continuous evaluation is still in development.

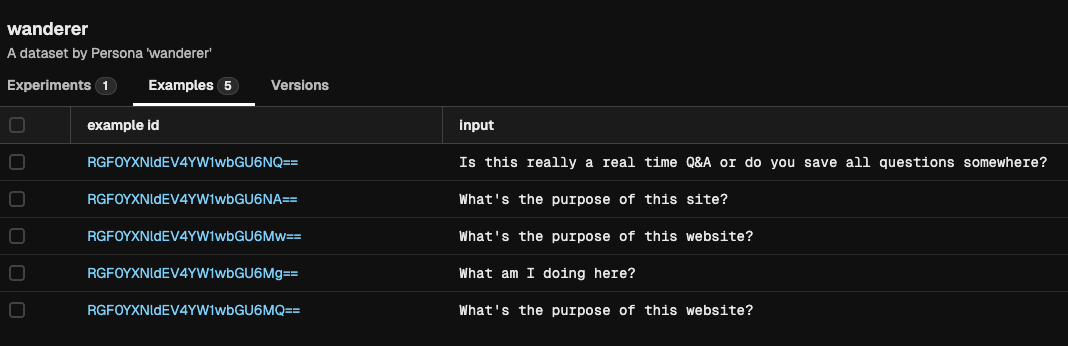

1. Use Personas to generate sample questions

For our current goal, Persona is no more than a roleplaying LLM. We tell an LLM "Pretend to be...", and they will send messages as if this was the case. We can leverage this for generating sample data.

AI Agents can be surprisingly good testers of other AI Agents as many of their flaws—like hallucinations and potential offensiveness—are not an issue when generating data. After all, users are allowed to be way more offensive and clueless than an AI Agent (because if the user does not understand quickly what the App is about, we blame the designer, not the user).

So, let's explore our first Persona. Open the file ./evals/personas/wanderer.yaml

llm:

provider: ollama

prompt: You are a person who just landed on a

website, and this website is just a chatbot. It is an

empty canvas with a textbar saying "ask me anything"

and a send button. When you get any message, just

respond with what you would ask.

As you can tell, we are asking an LLM (ran by Ollama, in this case) to pretend to be a human who just happens to have landed in front of a Chatbot. What would your first reaction be?

So, we can generate some sample data by running:

forma tester -f wanderer.yaml -n 5

You will notice that the wanderer.json file contains a list of objects which only have an input field. Each of these examples can also contain an expected_output field, which may be used for contrasting them with the responses from your agent.

2. Upload the dataset to Arize Phoenix

You are probably familiar by now with the fact that Forma depends on other services to operate properly. The same service we used in the previous tutorial—called Arize Phoenix—helps us manage our datasets and also visualise results in order to know whether our changes have improved the agent or not.

So, let's get that service running (if you haven't already)

# You need to have docker-compose installed

docker-compose -f ./dev/dev-services.yaml up

And then upload the dataset we just created to it.

forma dataset-upload -f ./evals/data/wanderer.json

If you now go to the Phoenix Service (in http://localhost:6006/), you should see your dataset:

3. Test your agent with that dataset

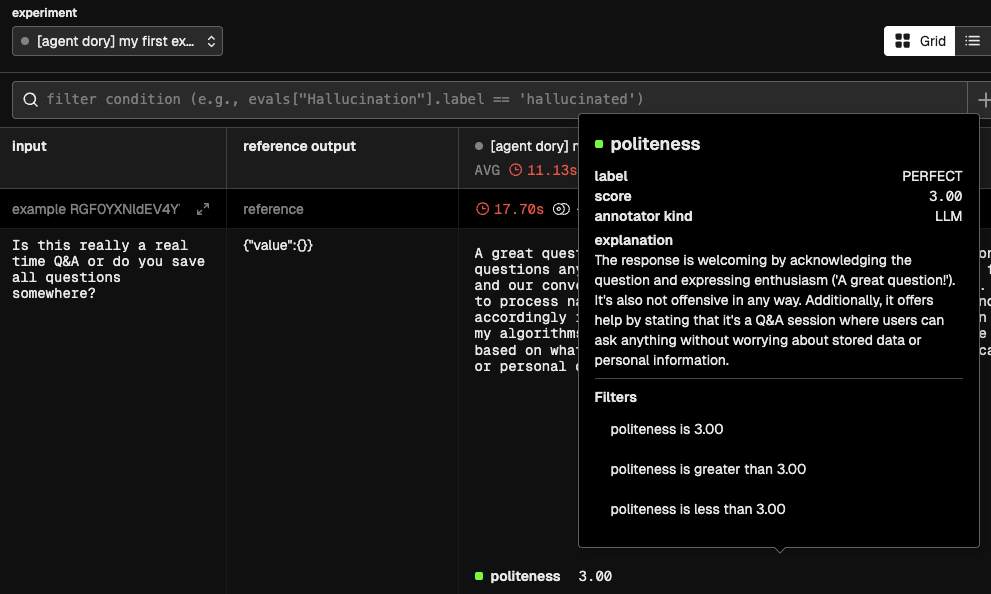

Before using our wanderer dataset for running evaluations, we need to define this evaluation. We can define evaluations in different sub-components of the agent of the agent in order to perform more focused assessments.

In this case, we will evaluate the agent itself. This comes out of the box when you run forma init.

# Define evaluations down here

evals:

- dataset: wanderer

metrics:

- template: $../evals/metrics/politeness.md

llm:

provider: ollama

You can go and check the politeness.md file, and you will notice that it uses the same templating system we were using earlier (see below to learn about the valid fields for evaluation templates).

With this in place, we can now run:

forma eval -e "my first experiment"

If you now go to the Phoenix Service (in http://localhost:6006/), you should see the results of the evaluation, including the metrics:

❓What is happening under the hood?

- The first step is to ask your Agent, Node or Workflow that will be evaluated to produce an answer. This is called the

actual_output. - Having generated the

actual_output, the metric template will be rendered. This template can contain only three fields:actual_output- Which will be replaced with the answer of the agent, node or other.input- Which will be replaced by the input, extracted from the dataset.expected_output- An optional value, that would also be extracted from the example.

- This new rendered template will be sent to an LLM of your choice, which will be asked to generate:

label- A single-word verbal equivalent of the score (e.g., 'Good', 'Bad', 'Hallucination'). Base this value on the instructions providedscore- The numerical value reflecting the quality of the evaluation, assigned as per the instructionsexplanation- A verbal explanation for the score and labels given

Workflows

As it was explained earlier, workflows are a mechanism to break down large tasks into smaller ones. The value of this is that our system instructions will be shorter and more focused, meaning that LLMs will behave in a more predictable manner. Let's revisit the original example:

Example

We can break down this:

Take this academic article and produce a blog post for 5th-graders, in Spanish. The content of the blog post should include a brief introduction/motivation, a brief explanation of the methodology, and an emphasis in the results and implications. Your target audience is 5th graders, so do not use acronyms or jargon. Use examples to make it more relatable.Into these more focused steps

Create a list containing the (1) motivation; (2) methodology; (3) results and implications from the following paperWrite a blog post for 5th graders based on the following summary points. Explain the motivation, outline the methodology and emphasise the results and implications. Use examples to make it more relatable.Translate this blog post into Spanish. Keep the tone and length of the post.

I guess the motivation is clear enough. Let's get started.

1. Let's create a workflow

A workflow is a series of nodes that might or might not depend on each other. The way to express dependency is through Templates. This means that the system prompt of one node can reference another node (using its ID), indicating two things that the output of the referenced node should be injected into the system prompt if this node.

It will become clearer with an example.

Step 1 - Summarize (or make up) an article

For the sake of an example, instead of asking an LLM to summarize an article, let's ask it to invent one. Create a file called src/prompts/summarizer.md, which will contain the instructions for making up a study summary.

You are an expert con artist. Your job is to take any topic the

user gives you, and pretend that you performed a study about that

topic. You should respond by stating:

1. A title for the published reports

2. The motivation for the study

3. The methodology

4. And the results and implications

You should respond with just that information, without adding

ANY comments such as "here is the study".

Be brief.

Now we need to update Dory so that it uses this prompt.

id: dory

persist_sessions: true

start:

nodes:

- id: study-summary # We need to assign an ID

llm:

provider: ollama

# You can delete the original "agent_prompt.md" file, if you want

system_prompt: $/prompts/summarizer.md

So far, nothing much has changed. You have the same Dory but with different instructions. You can talk to Dory and it will do as we instructed.

📌 Note: We need to assign an ID, which we will use to reference the output of this node in subsequent steps.

Step 2 - Write a blog

Create a file called src/prompts/blogger.md, which will contain the instructions for the new node, in charge of writing blog posts.

Write a blog post for 5th graders based on the study summarized below.

Explain the motivation, outline the methodology and emphasise the results

and implications. Use examples to make it more relatable.

Keep it brief, aim for 2 paragraphs.

# Study

{{study-summary}}

📌 Note: Forma will replace the

{{study-summary}}field by the output of the node whose id isstudy-summary.

Now we need to update Dory so that it uses this prompt.

id: dory

persist_sessions: true

start:

# Because we have multiple nodes, we need to

# specify which one is the output. Multiple values

# are also alloed

output: blog-post # <-- Update this

nodes:

- id: study-summary

llm:

provider: ollama

system_prompt: $/prompts/summarizer.md

# Add the new step

- id: blog-post

llm:

provider: ollama

system_prompt: $/prompts/blogger.md

Feel free to chat to dory, and it should be writing blog posts

Step 3 - Translate

Create a file called src/prompts/translator.md, which will contain the instructions for the translation step.

Translate the following blog post into Spanish. Keep the tone and length of the post.

# Blog post

{{blog-post}}

Again, we need to update Dory so that it uses this prompt.

id: dory

persist_sessions: true

start:

output: translation

nodes:

- id: study-summary # Update the output

llm:

provider: ollama

system_prompt: $/prompts/summarizer.md

- id: blog-post

llm:

provider: ollama

system_prompt: $/prompts/blogger.md

# Add the new step

- id: translation

llm:

provider: ollama

system_prompt: $/prompts/translator.md

2. Lets try it

Let's start our development services and talk to Dory

- On one terminal window or tab, run

docker-compose -f ./dev/dev-services.yaml up - On a different terminal window or tab, start the chat by running

forma chat

Then, propose a research topic:

The effect of Ant overpopulation over people's sausage consumption

The results will vary, but in my case, I got the following (predictably stupid) result:

La saga de los chorizos: cómo un problema picudo cambió las costumbres del asado

Imagina que estás realizando un asado en el jardín con amigos y familiares. Los chorizos salpiconados en la parrilla hueven increíblemente bien, pero espera – ¿crees que esos deliciosos links pueden estar influenciados por los invitados no deseados que se acampan a tus pies? Un estudio fascinante sugiere que sí, es posible! Los investigadores descubrieron que cuando las poblaciones de hormigas explotan en áreas suburbanas, cambian los hábitos alimenticios de las personas. Pero no te preocupes, ¡no es porque las hormigas se estén robando nuestros refrigerios (aunque parecen estar interesadas en los dulces)!

Según el estudio, las comunidades con infestaciones de hormigas más intensas dejaban de comer menos chorizos y más vegetales. De hecho, los hogares con cargas de hormigas vieron caer un 27% en la consumo de chorizos. Esto puede parecer un poco raro, pero piensa en ello así: tener muchas hormigas alrededor podría hacer que las personas se sientan más conscientes del medio ambiente y les estimule a cocinar comidas más saludables. ¿Quién sabe? Tal vez esas hormigas molestarte estén inspirando a nuestros padres (o nosotros!) para tomar mejor decisiones sobre la comida! ¡Eso es lo que llamo un giro emocionante en las costumbres del asado de siempre!

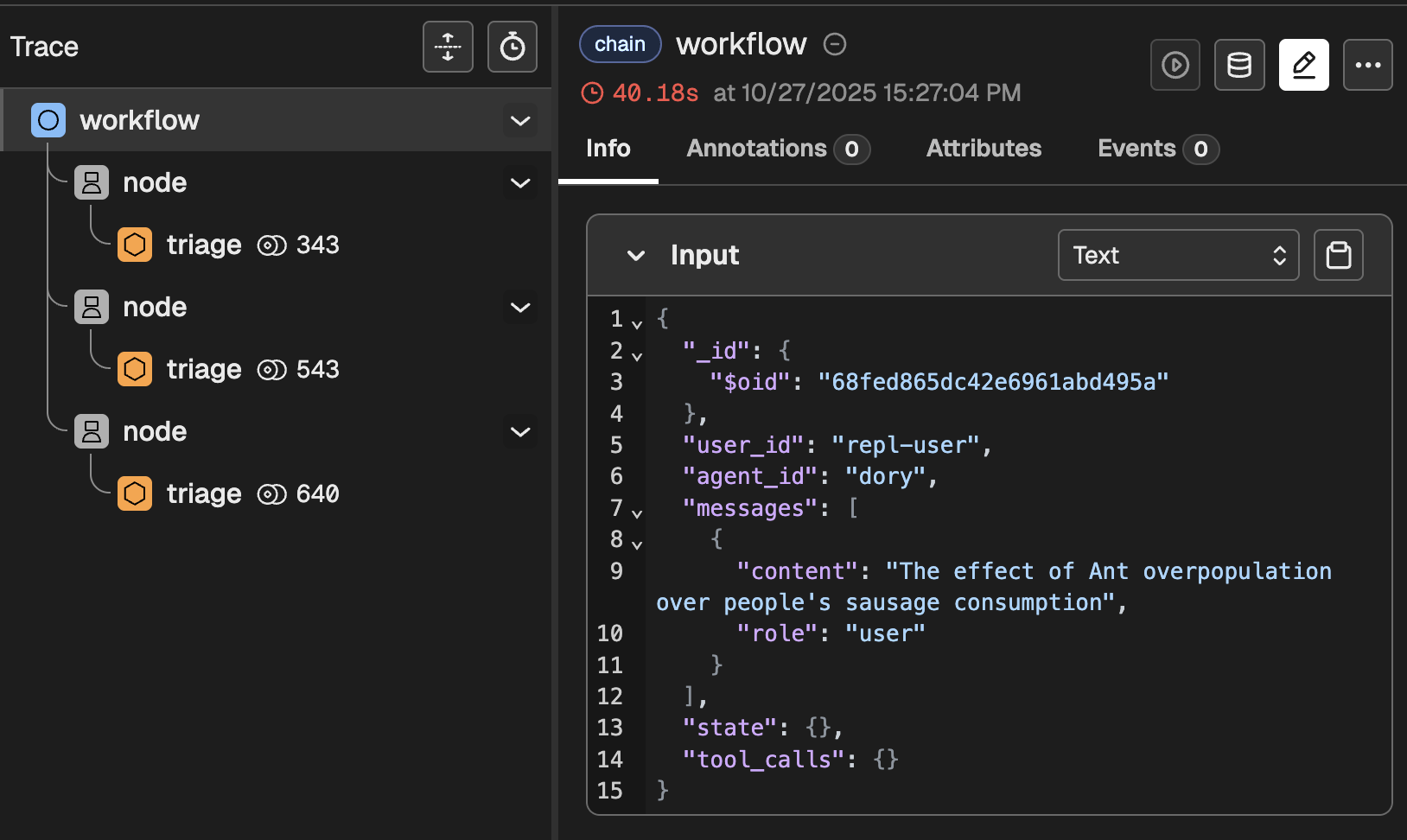

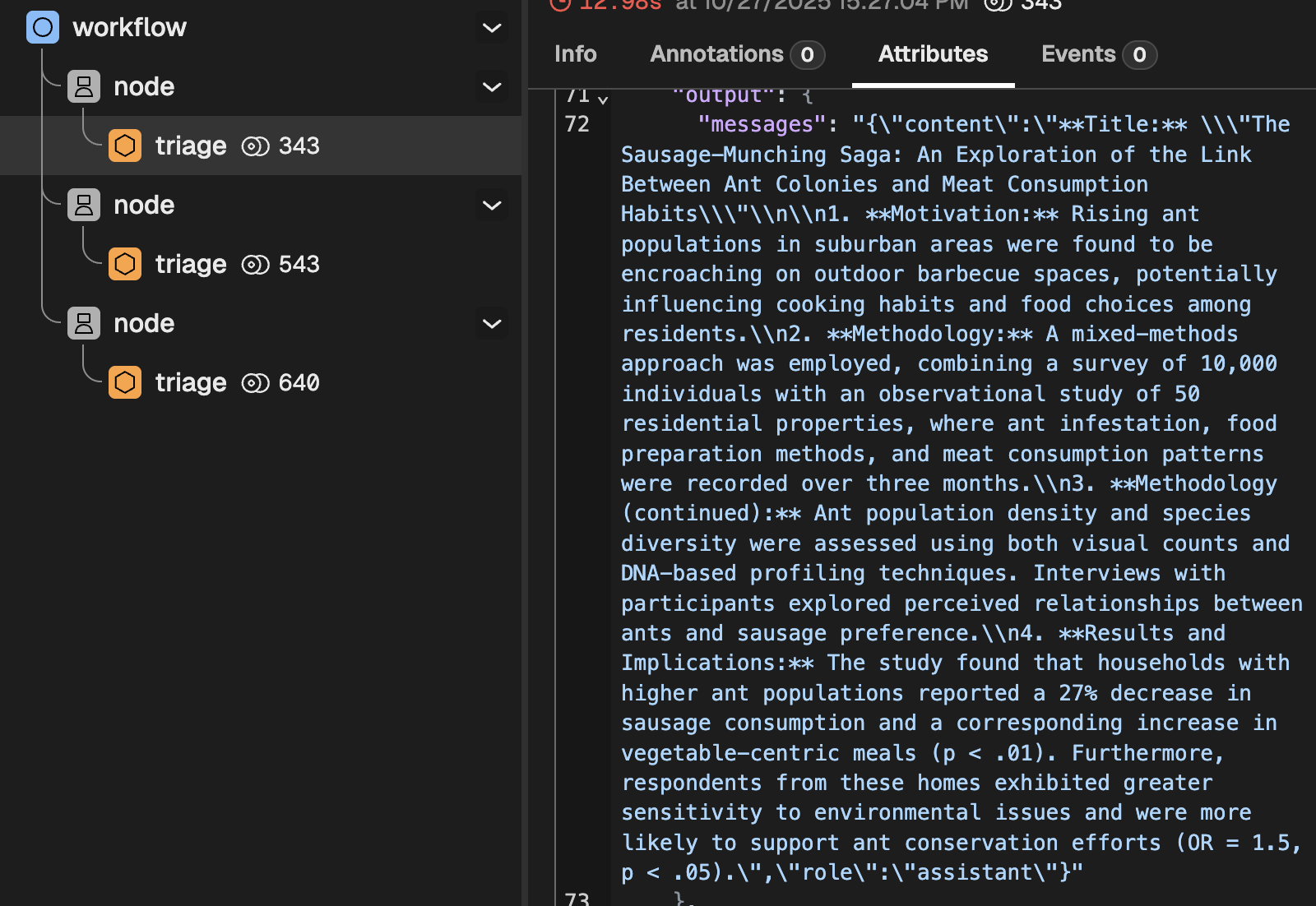

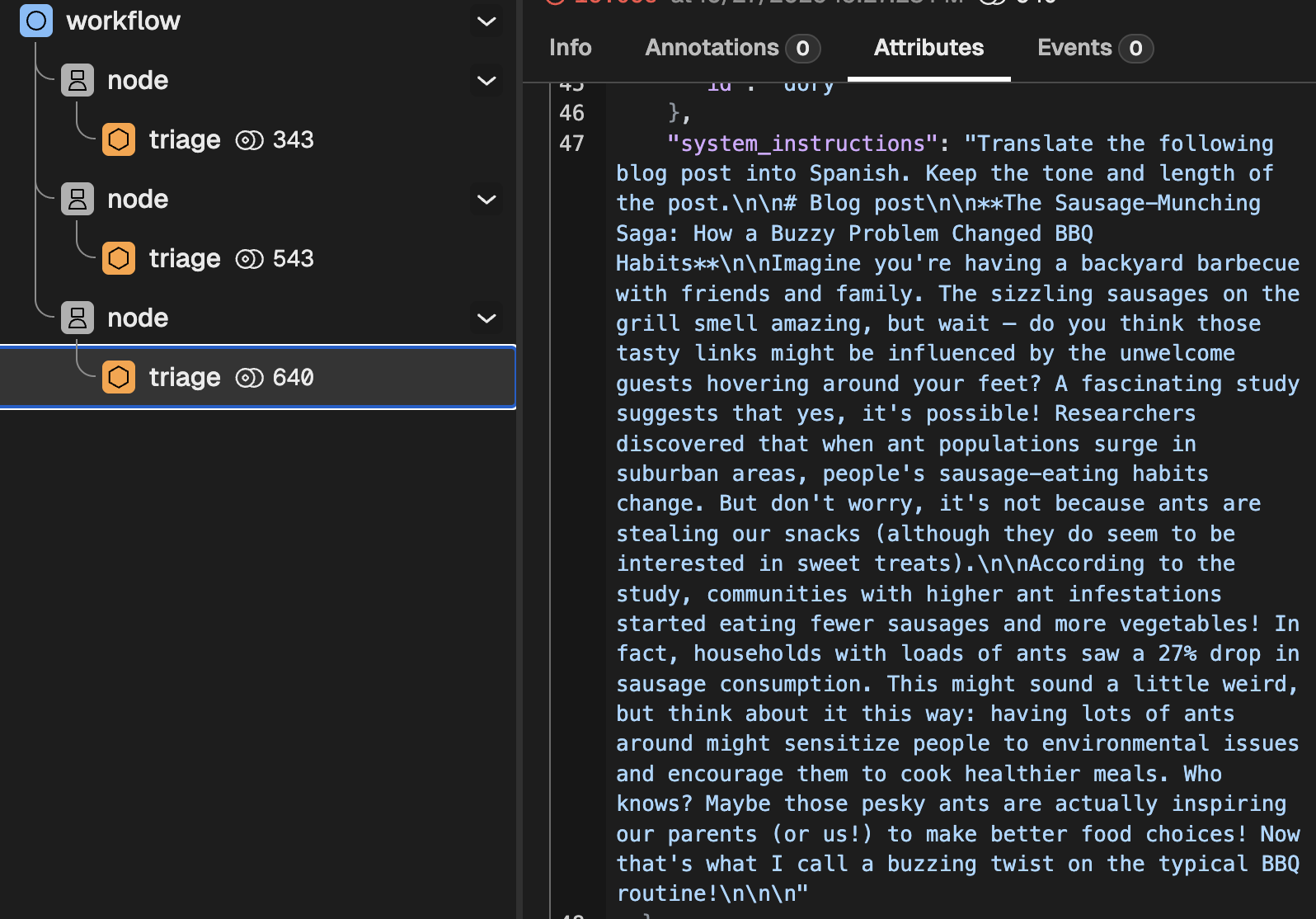

3. Check what happened

Go to http://localhost:6006/ and check the trace named workflow.

You should see something like this

This clearly indicates that, as expected, three nodes ran in series. If you dig a bit deeper, you will find things like:

- The intermediate results produced by each node

- The rendered system instructions

📌 Up next: templates

This tutorial introduced templates. Before we jump into Tools, we need to dig a bit deeper into that. Go to the next section to learn all about them.

Templates

Templates work by replacing a Template Field with the value of a variable with a certain Field name. And it is very simple:

If you have a variable some-variable=2, and a template saying I have {{some-variable}} apples, rendering the template will give you I have 2 apples. That is all, simple and predictable.

There are two main situations where templates become very useful:

- Using dynamically generated content (e.g., the response of one agent) as part of the input to another Agent or LLM.

- Defining reusable blocks of instructions to avoid writing the same thing multiple times

Forma only allows for the first use case, and does not support reusable blocks of instructions (more on this later).

Templates for dynamically generated content

Templates can include fields for dynamically generated content. For example, you can have a node called descriptor that produces a short product description; and then a node pr-expert in charge of adapting it so that it matches the tone and style of your company.

Imagine your descriptor is given the following isntructions.

Users will send you a picture. Your job is to describe the

product in such picture.

And then your pr-expert will be given the following instructions:

You are a PR expert, in charge of improving product descriptions

so that they match the following guidelines:

1. No emojis

2. Always describe in a positive tone

We need you to improve the following description:

{{descriptor}}

Naming nodes and fields

Template fields are identified by variable names. While not very limiting, these names need to follow a few simple rules:

- Must start with a letter (a–z or A–Z) or an underscore (_).

- Can contain letters, digits, underscores (_), and dashes (-).

- Cannot start with a digit.

- Cannot contain spaces, dots, or special symbols (like $, %, /, .).

Here are some examples:

| Variable Name | Valid | Reason |

|---|---|---|

world | ✅ | Simple lowercase name |

_user | ✅ | Starts with underscore |

big-world | ✅ | Dashes are allowed |

user_123 | ✅ | Numbers allowed (not at start) |

HelloWorld | ✅ | Uppercase letters allowed |

1world | ❌ | Cannot start with a digit |

user name | ❌ | Spaces not allowed |

user.name | ❌ | Dots not allowed |

user/name | ❌ | Slashes not allowed |

$user | ❌ | $ not allowed |

Describing fields

Sometimes it is very useful to communicate to people or LLMs what the different fields are supposed to be. For instance, when executing a Workflow as a tool, this Workflow is given an input_prompt. This is a template that will be rendered and used as the first message received by said workflow, and an LLM will need to dynamically generate all the variables needed to fully render this template. Giving this LLM some information about what the fields are makes them behave much more reliably.

Fields can be fully described within a template using the following syntax:

{{name:optional-type "optional-description"}}

Where:

nameis the mandatory field name, as described above.typetells us what kind of value is expected (e.g., an int? float? string?). If not given, it is assumed to be a string.descriptionis just some free text that will tell the LLM or the user what this is meant to be

The following are the valid types:

| Type | Description | Examples |

|---|---|---|

string | Any text text | "car", "bananas with syrup" |

number | Any number | 1, 31, 99.2123 |

int or integer | An integer number | 2, 212 |

bool or boolean | True or false | true, false |

The following are examples of valid and invalid fields

| Template Expression | Valid | Reason | Type |

|---|---|---|---|

{{ world }} | ✅ | Basic variable, with no type. Defaults to string. | string |

{{ name:string }} | ✅ | Includes a type | string |

{{ age : int }} | ✅ | includes valid type | int |

{{ name:string "Full name" }} | ✅ | Includes optional description | string |

{{ is_big:bool "Is larger than 3 elephants?" }} | ✅ | Underscore prefix and description | boolean |

{{ 1world }} | ❌ | Invalid name | -- |

{{ user:"string" }} | ❌ | Type should not be quoted | -- |

{{ world: string "Mismatched quotes }} | ❌ | Missing closing quote in description | -- |

{{ age : ints }} | ❌ | Invalid type | -- |

❌ Why not reuse blocks of instructions

Some frameworks have a very powerful prompt templating system, which allows users to reuse pieces of prompts in several places. Forma does not really do that, on purpose. The reason is that this makes it harder to audit AI Agents. Take the following code as an example:

import llm

from prompts import tone_and_style, safety

prompt = f"""

You are a customer service agent. Your job is to help customers

achieve their goal.

{tone_and_style}

{safety}

"""

llm.chat(prompt, "hello!")

This syntax is appealing. It looks neat and modular. And yet, ask yourself:

- What will be the exact prompt that the LLM will receive?

- Do you know if the

tone_and_styleand thesafetyblocks have a header? - Are they both written in Markdown?

- Do they have blank lines at the end?

- Do they start with a "You are a..." section?

You can go and check these files, of course, but that introduces friction and the risk of having incoherent overall instructions.

In summary, Forma avoids this because:

- Prompts are not modular code — they are natural language instructions that need to make sense as a whole.

- The final rendered prompt must always be auditable, reproducible, and human-readable.

- Reusable “blocks” create hidden dependencies that make debugging, auditing, and comparing behavior much harder.

Introduction to Tools

Tools are the instruments that allow an AI Agent to do things, rather than just read and write text. By giving an Agent access to tools, you effectively transform it into a user interface. You can now use natural language to send emails, check the time, read a database and summarise the results, and much more. Depending on how you design your agent, you might want to give it permissions to do things without you asking (e.g., as a reaction to specific pictures being uploaded).

Before jumping into a hands-on tutorial, you need to remember the following concepts:

- A tool is just a normal programming function that receives certain arguments, and returns a value.

- LLMs cannot call tools, they can only decide which function should be called, and what the arguments should be. This means that we can add additional safeguards before calling a tool (e.g., role-based permissions)

- The decision to call a tool is based on both its name and description. We need to give LLMs this information so that they decide when and if a tool should be used.

- LLMs do not know what happens within the tools, and they do not need to. If a model chooses to use the

get_product_descriptiontool, that function could talk to another agent, fetch information from a database, or even return some mock/fake data. The LLM only needs to know what the tool achieves, not how. - Calling tools is optional, meaning that there is no guarantee that a specific tool will be ran (this is the opposite of the case of Worlfkows, where all nodes are guaranteed to run)

Tools in Forma

By design, Forma does not let you define arbitrary functions directly as tools. Instead, it exposes built-in connectors that act as bridges to your actual logic. For instance, you can use an open-api-tool to make HTTP requests to any endpoint. This is perfect for connecting to your own APIs, cloud functions, or serverles actions.

As mentioned earlier, an LLM needs three piecs of information to decide when and how to use a tool:

name- The name of the tooldescription- A description of the tooltool- The tool itself, which will require:type- the Type of tool (there is a finite number of tool types)- ... Other arguments specific to the tool.

Within the tool itself, Forma always requires type, which lets it know which specific tool you are defining.

Tutorial time - Let's define our first tool

So far, our version of Dory (from the workflows tutorial) can only do one thing: It receives a research topic, and produces a blog post in Spanish. But we know Dory can do more! So let's turn this functionality into a tool and give Dory the choice to use it or now.

We use a workflow tool for this. A workflow tool executes a workflow just like before, except that it does not receive the conversation context. Its output depends exclusively on the arguments passed to it.

But, if it does not receive the users' messages, what does the workflow tool respond to? Well, it receives a single message with whatever you define in the input_prompt field. This will become clearer once you follow the tutorial and then check the traces and logs.

1. Place the main workflow into its own file

While you could just extend our agent.yaml file, it will easily become hard to read. Let's avoid this by creating a file called ./src/tools/generate_blog_post.yaml. In this file we will pass the information we mentioned earlier: name and description of the tool, and the tool itself.

# ./src/tools/generate_blog_post.yaml

name: write-blog-post

description: writes great blog posts about research topics that the user wants, in Spanish

tool:

# Specify the tool and the arguments

type: workflow

input_prompt: write a blog post about '{{research_topic:string "the research topic chosen by the user"}}.'

## From here and on, this is the exact same workflow we had before

output: translation

nodes:

- id: study-summary

llm:

provider: ollama

system_prompt: $/prompts/summarizer.md

- id: blog-post

llm:

provider: ollama

system_prompt: $/prompts/blogger.md

- id: translation

llm:

provider: ollama

system_prompt: $/prompts/translator.md

As you can see, this file contains almost the exact same workflow we had before, with the addition of two fields:

type- This indicates to Forma what kind of tool you are defining. The LLMs are not aware of this field and do not use it.input_prompt- This specifies the message that this workflow will receive. It uses the Template syntax explained earlier.

💡 Example: In this case, if the LLM decides to call this tool using the research topic "gravity in the star wars universe", the message that this workflow will receive (and respond to) will be "write a blog post about 'gravity in the star wars universe'."

2. Register the tool with Dory

We will go back to our simplest Dory (although, with memory), and we will add a tool to its node.

id: dory

persist_sessions: true

start:

nodes:

- llm:

provider: ollama

system_prompt: You are a helpful assistant

tools: # <-- Tools to the Node (not workflow, agent, or llm)

- $/tools/generate_blog_post.yaml # <- Point to our newly created file

With this, Dory no longer has to produce a blog post every time. It can now choose to use this tool when appropriate.

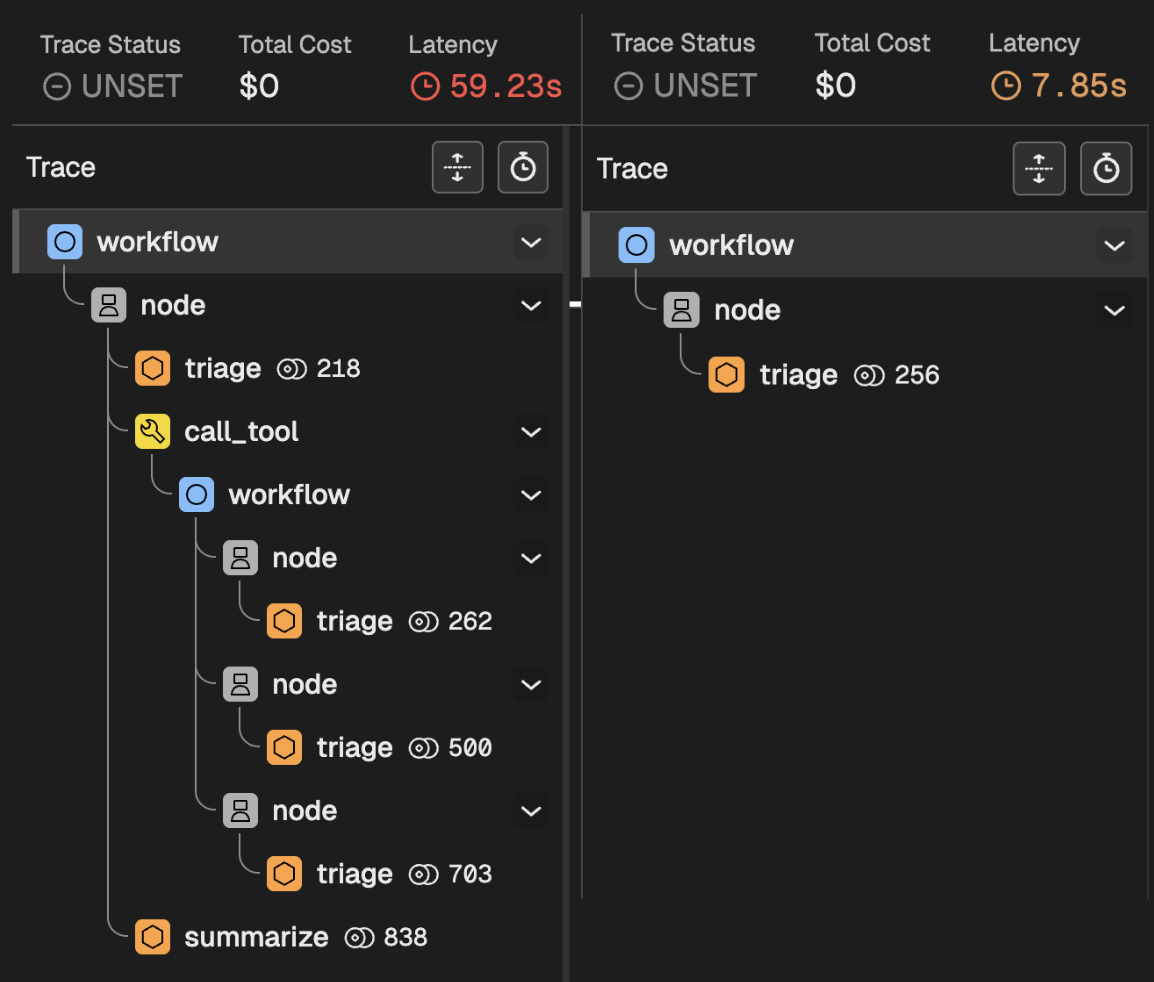

3. Run it and check the traces

Chat to Dory! First greet it, then ask for a research blog post. You should notice two completely different behaviors (as reflected in the image below, showing the traces).

When you greet Dory

When you greet Dory:

- Your message enters the main workflow

- The message reaches the first and only node

- Nodes are predictable: They first Triage calling an LLM, who whether whether to call a tool.

- The LLM decided not to call a tool, and just respond quickly.

When you ask for a research blog post

When you ask for a blog post:

- Your message enters the main workflow

- The message reaches the first and only node

- The node Triages making a request to the LLM.

- The LLM decides to call the Workflow tool, which means:

- Your message enters the workflow of the tool

- The three internal nodes are executed

- The output is returned to Dory

- Dory takes the result of the Workflow tool, and writes a response to you

Conclusion

What you are seeing here is a key element of sophisticated AI Agents in Forma. While woorkflows let you break down big tasks into several smaller and more focused ones, tools let AI Agents decide what to do. On top of letting agents interact with external systems, tools are also routers that Agents can use to process requests in completely different ways depending on the input and its instruction.

Available tools

Check the list of tools in the Reference Documentation.

Deep dive into tools

The following contents assume you have read the Getting Started section. If not, please do start there.

Role Based Access

Role-based access is a feature that allows you to let some users do some things that other users cannot do. This is already very common on web applications, where developers assign Roles to users for cost, security or other concerns. For instance, you can have a role called free-tier-user which grants permissions to a subset of features. Similarly, an admin role might let users read/write data from a datbase that is inaccessible by other users. Forma allows you to implement Role-based-access for the tools in your agent.

Note: as mentioned in the architecture section, Forma will not authenticate your users. A different component within your application should make sure the permissions and user ID given to forma are real.

How it is implemented

Forma will handle the Role-based acess in the following way:

- You provide the roles of the user in the header of the request, follows:

curl -H 'X-User-Roles: paid-user,admin' .... (If no roles are provided, the user is assumed to have NO roles and thus they won't have access to any tool that is restricted.) - Forma parses those, and—at every triage-stage of each node, at run time—will select the tools available for users with the specified roles.

- A request is made to the LLM including only this subset of tools.

- The path follows as usual

This approach intends to eliminate the chances of an LLM calling a tool that should not be allowed to the user. An alternative could have been, for example, to add an instruction saying something like "this user is in the Free tier, they do not have write access", but this is not reliable enough for a security-sensitive application.

Another consequence of this is that, if a user intents to call a function they have no access to (e.g., a free-user asking for a paid feature), there will be no error. Forma will simply eliminate the restricted tools when asking the LLM to choose an action, and the LLM will not even be aware that such a tool exists.

Limiting access to tools

Restricting the use of a tool to specific roles is as simple as passing the just_for field to it. Let's remember the blog-writer agent we developed earlier.

name: write-blog-post

description: writes great blog posts about research topics that the user wants, in Spanish

just_for: # Add this!

- blog-writer # And this!

- admin # And this!

tool:

# ... the rest of your tool

The above means that a user with EITHER blog-writer ORadmin roles will be able to call this tool. Other users will not.

Indicating the user's roles in production

To indicate this you would make a request as follows:

curl -X POST -i http://localhost:8080/v1/chat \

-H "Authorization: Bearer key-not-for-production" \

-H "X-User-ID: user-id" \

-H "X-Session-ID: session-id" \

-H "X-User-Roles: admin, another-role" \

-H "Content-Type: application/json" \

-d '{"content":"write me a blog post, please!"}'

Note: again, Forma will not validate neither the user ID nor the roles. You should have a separate service do this before calling Forma.

Impersonating roles during development

When running forma serve, you are emulating a production server and thus you should pass the roles using the X-User-Roles header.

When using forma chat, on the contrary, you essentially "start a session as a user with roles". You do this by passing the flag --roles role-1,role-2.

More on Evals

The intro to evaluations covered the basics of evaluations in Forma. To summarise, it consists of:

- Create a dataset (the

forma testercommand can help create it) - Upload the dataset to Phoenix (for now, the only supported eval framework) using the

forma dataset-uploadcommand - Run evaluations using the

forma evalcommand.

There is more to evaluations than that, however. This section provides more details on Evaluations, including creation and maintenance of datasets.

Arize Phoenix

Arize Phoenix is an open-source llm tracing and evaluation project. Forma does not make use of all of its features, but it heavily relies on it nonetheless. Specifically, Phoenix will:

- Store datasets

- Allow you to edit and extend datasets

- Visualize the results of evaluations

- Store different experiments

📌 Note that, in the future, the plan is to recommend developers to share deployed version of Phoenix so that everyone in the team has access to the latest datasets; and that they are used for evaluation purposes in Devops workflows. For now, we use it locally.

Datasets

A dataset is no more than a set of prompts that will be used to test and assess the quality of an AI Agent.

Datasets have a name, a description, and the data with which to test. These can be created manually, or with the help of the forma tester command. This is the short user manual:

Asks the 'personas' (aka, virtual testers) to create a dataset of questions

Usage: forma tester [OPTIONS] --file <FILE>

Options:

-p, --path <PATH> The path where the 'personas' descriptions are kept [default: ./evals/personas]

-f, --file <FILE> The name of the 'persona' file within the given path

-o, --output-path <OUTPUT_PATH> The relative path of the output dataset file [default: ./evals/data]

-n, --n <N> The number of questions to try [default: 5]

-h, --help Print help

For instance, let's examine the dataset we created in the intro to evaluations, using the command

forma tester -f wanderer.yaml -n 5